How do offline sites behave in the Avassa Edge Platform?

Offline sites. Can’t live with them, can’t live without them. Suppose you have systems running at thousands of locations, such as Edge AI applications, robot controllers, video analytics, point-of-sale systems, and other on-site applications. What would happen if your connectivity to the cloud failed? What if your cloud provider were hit by a cyber-attack?

Could a single, centralized software upgrade failure cause all locations to go down?

Since day one, we at Avassa have focused on providing an edge platform that guarantees that all your edge locations are fully autonomous.

There is more to that than one might think. Autonomous edge sites are highly desired but quite complex to build on your own, with many subtle details that might make your edge application dependent on cloud APIs and control commands for restarts or migration.

At Avassa, we have designed our system to enable fully autonomous self-healing edge sites. Making this our primary design focus, we have been humbly surprised over the years to encounter edge solutions that struggle to provide the most fundamental requirements, such as a local fail-over to another host in the absence of cloud connectivity.

In this blog post, I will illustrate the technical features inherent in our platform that guarantee that your applications will provide the highest possible degree of availability in the event of various incidents.

An important aspect to remember when reading is that while sites must be autonomous, central orchestration is a hard requirement. In other words, the local edge site autonomy must never negatively impact the efficiency of central operations across thousands of edge sites.

Don’t worry, you can have both.

A closer look at Edge Clusters and local capabilities

On each host at the Avassa edge sites, install the Edge Enforcer. This small container contains everything necessary to run applications at the edge. The Edge Enforcer embeds all dependencies needed for operating the applications, including:

- An image repository: All application container images are cached and replicated.

- A secrets manager: All secrets that the application needs are distributed to the sites and securely managed by each Edge Enforcer. Secrets are also replicated.

In many other systems, the image and secrets manager components are external and sometimes located in the cloud, making it nearly impossible to achieve edge site autonomy. Furthermore, it’s crucial to note that states, images, and secrets are replicated among the Edge Enforcers on the site. This ensures that the Edge Enforcers are always ready to migrate applications during host failures.

The Edge Enforcers form a Raft cluster and implement all control functionality locally at the site, allowing them to make local scheduling and self-healing decisions. The central component, our Control Tower, is never involved in the healing actions happening at the site. The Control Tower’s only job is to drive the deployments of new and upgraded applications.

Once you’ve deployed your applications to your sites, you can disconnect from the external world for long periods of time and rely entirely on the local clusters.

Offline sites: Examples of host failures at the edge site

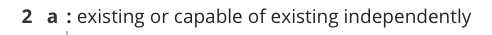

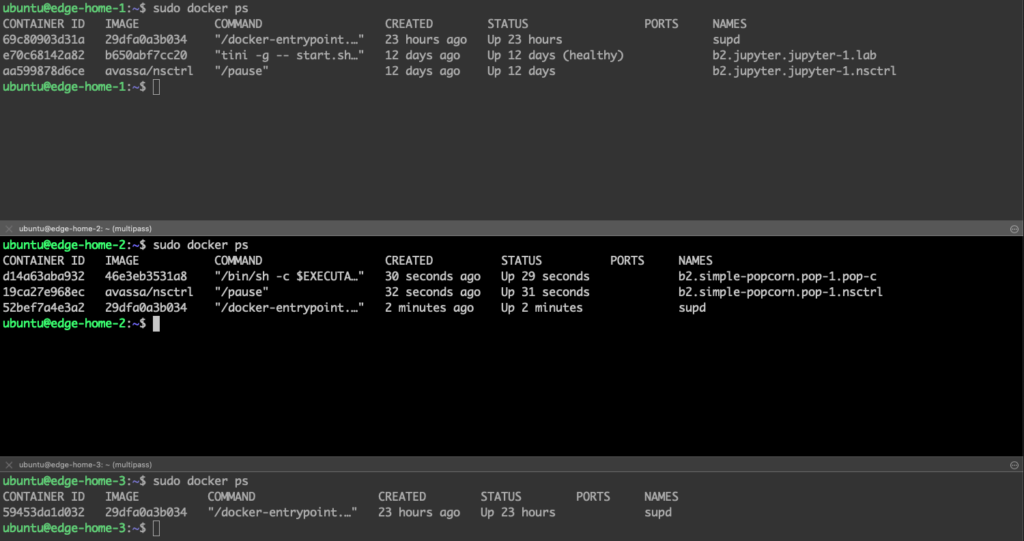

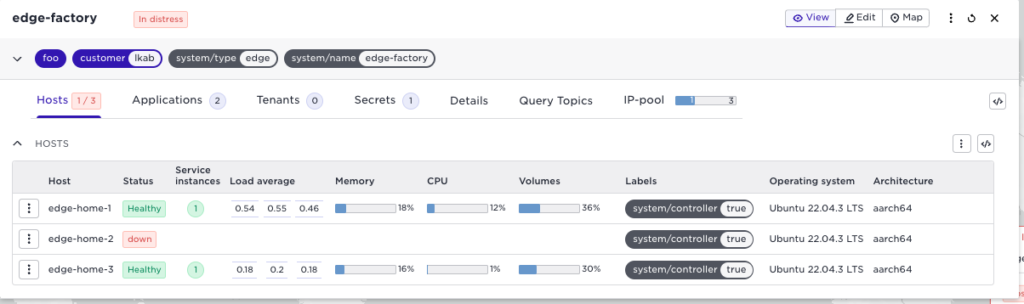

In this illustration, the site edge-factory has three hosts: edge-home-1, edge-home-2, and edge-home-3.

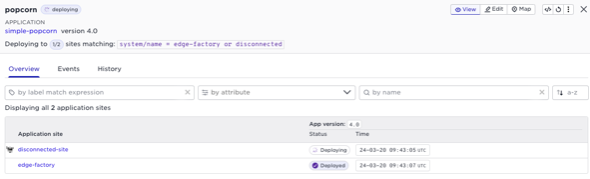

Let’s take a closer look at the application healing process on the edge site. Note that all action happens locally on the site, without any involvement from the central Control Tower. The site does not have to contact external APIs or resources to migrate the workloads. I’ll use these examples to demonstrate what happens when the connectivity to the edge site is lost and an application fails. But before disconnecting, let us look at the state from the Control Tower. We can see a site edge-factory that has two applications running: a Jupyter notebook and a demo application, simple-popcorn:

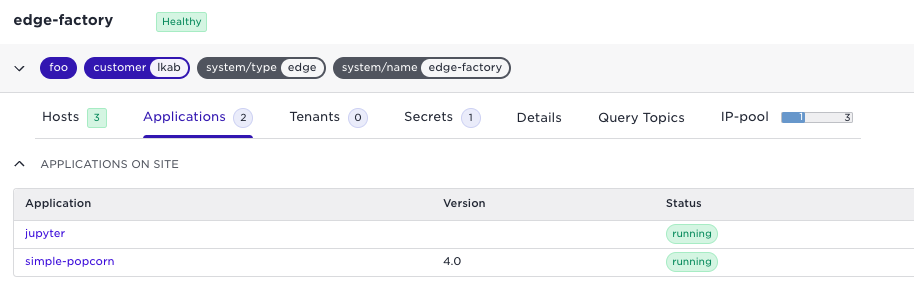

If we check the simple-popcorn application, we can see that it is running on edge-home-2.

So, it’s time for the connectivity to fail!

From now on, the site will be disconnected, and we will only inspect and trigger faults locally.

Below, you can see where the containers are running; again, simple-popcorn is running on edge-home-2:

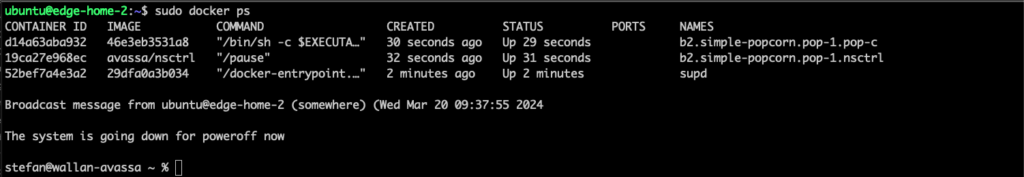

I am now stopping the edge-home-2 host (note that the cluster is now both disconnected from the Control Tower and has one host down):

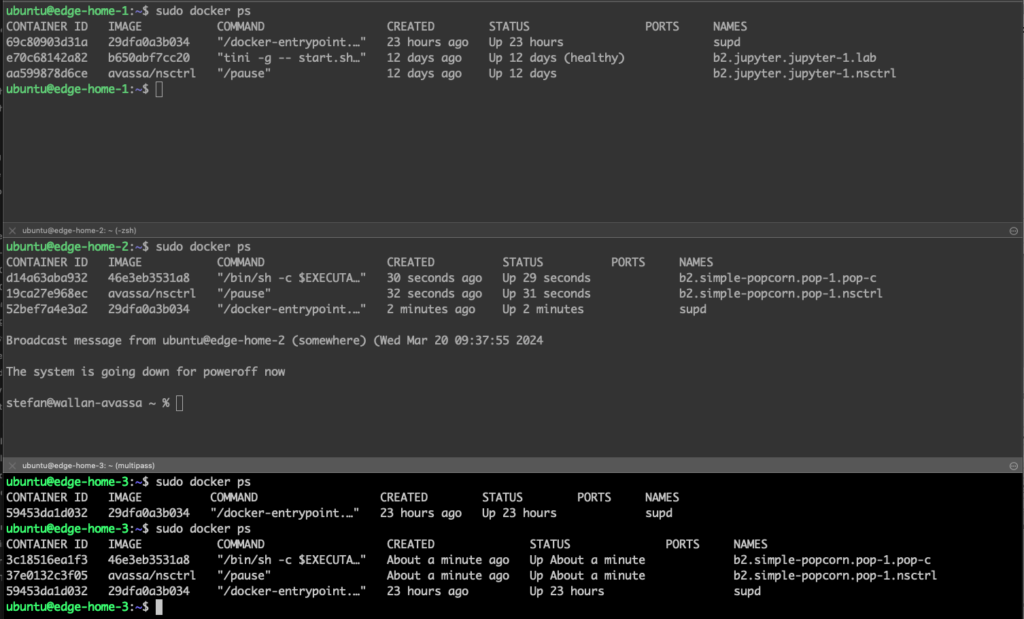

Within a minute, the popcorn application is migrated to edge-home-3:

The decisions were made by the Edge Enforcers, and the required images and other artifacts had already been replicated to edge-home-3.

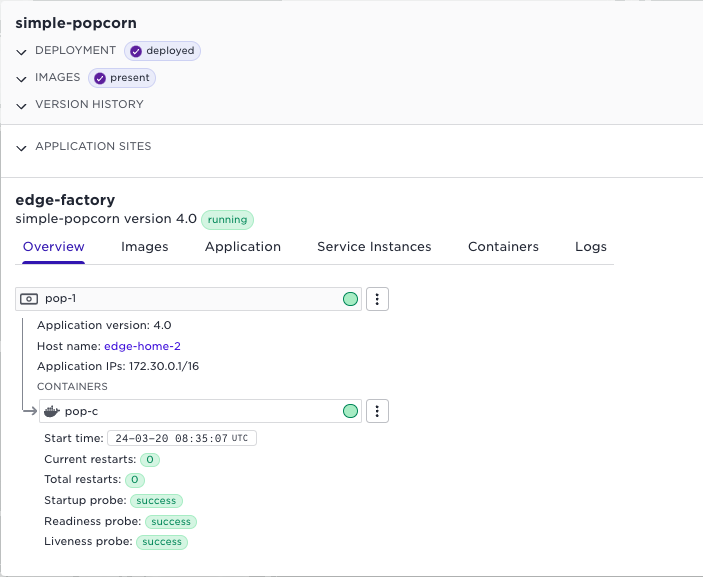
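To make the failover above more concrete, here is a minimal Python sketch of the kind of site-local decision involved. It is not the Edge Enforcer's actual scheduler: the `Host` class, the `reschedule` function, the image names, and the placement of the Jupyter notebook on edge-home-1 are all assumptions made for illustration. The point is only that the choice needs nothing beyond state that is already present on the site.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    up: bool = True
    cached_images: set = field(default_factory=set)
    apps: list = field(default_factory=list)


def reschedule(hosts, failed_name, required_images):
    """Move apps off a failed host using only site-local information."""
    failed = next(h for h in hosts if h.name == failed_name)
    failed.up = False
    moved = {}
    for app in list(failed.apps):
        # Candidates: healthy hosts that already hold every image the app needs.
        candidates = [
            h for h in hosts
            if h.up and required_images[app] <= h.cached_images
        ]
        if not candidates:
            continue  # no local target available; the app stays where it was listed
        # Hypothetical policy: prefer the least loaded host, break ties by name.
        target = min(candidates, key=lambda h: (len(h.apps), h.name))
        failed.apps.remove(app)
        target.apps.append(app)
        moved[app] = target.name
    return moved


# Hypothetical image names; replication means every host caches all of them.
required = {"simple-popcorn": {"popcorn:1.0"}, "jupyter": {"jupyter:1.0"}}
everything = {"popcorn:1.0", "jupyter:1.0"}
site = [
    Host("edge-home-1", cached_images=set(everything), apps=["jupyter"]),
    Host("edge-home-2", cached_images=set(everything), apps=["simple-popcorn"]),
    Host("edge-home-3", cached_images=set(everything)),
]
moved = reschedule(site, "edge-home-2", required)
print(moved)  # {'simple-popcorn': 'edge-home-3'}
```

Because the images are already cached on every surviving host, the function never has to reach outside the site to pick a target.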

If we connect the site again, we can see the updated state in the Control Tower.

Application failures at the edge site

The same autonomy for edge sites applies to application-related issues. For instance, assume you have configured application probes. It’s important to note that the Avassa probes run locally at the site, testing the application response locally.

- If the startup or liveness probe fails, a local decision will be taken to restart the container.

- For the readiness probe, the site-local embedded DNS enables application availability. If you have an application with several replicas, the containers will run across hosts. The embedded DNS server distributes site-local client requests round-robin across the “ready” containers. If one of the containers does not respond OK👍 to the readiness probe, the DNS will only direct calls to the containers that are still ready.

Again, all of the above actions are implemented locally in the autonomous Edge Enforcers.
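As a rough illustration of the readiness behavior described above, the following Python sketch models a resolver that rotates over replicas and skips any that are not ready. The `SiteDns` class, its methods, the service name, and the IP addresses are hypothetical and say nothing about how the embedded DNS is actually implemented.

```python
class SiteDns:
    """Toy resolver: hands out ready replicas in round-robin order."""

    def __init__(self):
        self._replicas = {}  # service name -> {ip: is_ready}
        self._counter = {}   # service name -> number of lookups served

    def set_ready(self, service, ip, ready):
        # Called with the outcome of a (locally executed) readiness probe.
        self._replicas.setdefault(service, {})[ip] = ready

    def resolve(self, service):
        ready = sorted(
            ip for ip, ok in self._replicas.get(service, {}).items() if ok
        )
        if not ready:
            return None  # no replica is currently ready
        n = self._counter.get(service, 0)
        self._counter[service] = n + 1
        return ready[n % len(ready)]


dns = SiteDns()
for ip in ("10.0.0.1", "10.0.0.2", "10.0.0.3"):
    dns.set_ready("popcorn", ip, True)

first_round = [dns.resolve("popcorn") for _ in range(3)]

# The replica on 10.0.0.2 fails its readiness probe.
dns.set_ready("popcorn", "10.0.0.2", False)
after_failure = [dns.resolve("popcorn") for _ in range(4)]
```

After the failed probe, lookups only ever return the two remaining replicas, and the failing one comes back into rotation as soon as it is marked ready again.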

What about central modifications?

Let’s say that you have disconnected sites, and a new application deployment or upgrade is performed from the central Control Tower. What happens then? Disconnected sites should be viewed as being in a potentially long-lasting state rather than experiencing an error. Consider a situation where you have a new application for your fleet of trucks, robots, or stores. There’s a high probability that some of them will be disconnected at a given point in time. In the Avassa system, the deployment will persistently attempt to update the site as long as it is part of the deployment. All you need to do is wait until it reconnects, and everything will be fine.
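One way to picture this "disconnected is a state, not an error" approach is a reconciliation pass that is simply repeated until every site has caught up. The sketch below is a simplification under my own assumptions: the `reconcile` function, the site names, and the version strings are invented for illustration and are not the Control Tower's real data model.

```python
def reconcile(desired_version, sites):
    """One pass: update reachable sites, return the names still pending."""
    pending = []
    for name, site in sites.items():
        if site["version"] == desired_version:
            continue  # already up to date
        if site["connected"]:
            site["version"] = desired_version  # stands in for a real rollout
        else:
            pending.append(name)  # not an error, just not done yet
    return pending


sites = {
    "store-1": {"connected": True, "version": "1.0"},
    "truck-17": {"connected": False, "version": "1.0"},
}

first_pass = reconcile("1.1", sites)   # truck-17 is offline and stays pending
sites["truck-17"]["connected"] = True  # the truck comes back online
second_pass = reconcile("1.1", sites)  # nothing left to do afterwards
```

Nothing fails when a site is unreachable. The site remains in the pending list and is picked up on a later pass.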

What about local operations?

When the site is disconnected, it’s clear that you won’t be able to use the Control Tower UI or the Avassa command line from your central location for operational tasks on the disconnected site.

However, a site-local administrator can use the Avassa command line at the site.

You can display the status of all applications on the site and restart applications locally. But in specific scenarios it is essential to allow for local modifications. You might have a critical application configuration change or a new container version that must be deployed. The Avassa Edge Enforcer allows for those local modifications.

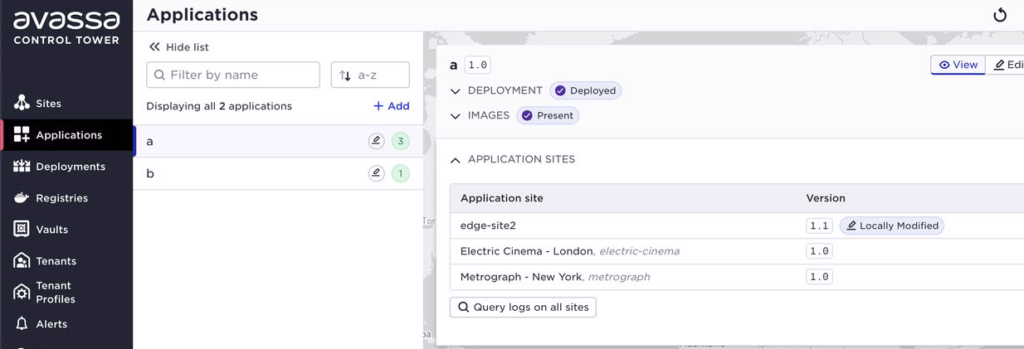

Modifications performed locally are flagged in the application state as locally modified. Later, when the connection is reestablished, this is indicated in the Control Tower UI. In the example below, applications a and b have been modified on edge-site2.

You can read more about local modifications in our Release Highlights from October 2023.

As long as the central operations team does not perform any central modifications, the site’s local configuration stays. However, any later central changes will override the local modifications.
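The rule above (local changes stay until a central change arrives, and then the central change wins) can be sketched in a few lines of Python. The `AppState` class and the two functions are hypothetical, and clearing the flag on a central change is my assumption rather than documented behavior.

```python
from dataclasses import dataclass


@dataclass
class AppState:
    config: dict
    locally_modified: bool = False


def apply_local_change(state, changes):
    """A site-local administrator edits the application while offline."""
    state.config.update(changes)
    state.locally_modified = True  # later surfaced centrally as an indicator


def apply_central_change(state, central_config):
    """A later central change replaces whatever was changed locally."""
    state.config = dict(central_config)
    state.locally_modified = False  # assumption: the flag is cleared


app = AppState(config={"image": "popcorn:1.0", "log_level": "info"})

apply_local_change(app, {"log_level": "debug"})
flag_after_local = app.locally_modified
config_after_local = dict(app.config)

apply_central_change(app, {"image": "popcorn:1.1", "log_level": "info"})
```

After the local change the flag is set and the debug setting is in effect. After the central change the configuration is exactly what the central team specified.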

Telemetry data excellence in offline scenarios

The Avassa Edge Platform embeds an edge-native pub/sub bus. The platform utilizes it to collect host metrics and container logs and exchange commands between the Control Tower and the edges. Application developers also use it to enable a communication bus at the site.

The pub/sub bus handles connection issues on its own; for example, it caches outgoing commands while the connection is down.

When creating topics, a number of replicas can be specified. In a replicated topic, failover is handled automatically as the topic is available on multiple hosts.

The topic size is also essential when building for long offline scenarios: the topic must be large enough to hold the expected offline time × the number of messages per unit of time × the message size. With correctly sized topics and topic replication, no messages will be lost, neither in offline scenarios nor at failover.
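As a worked example of the sizing rule, here is a small Python helper. The function name, the `headroom` parameter, and the example numbers (one week offline, 10 messages per second, 512-byte messages) are my own assumptions. How the size is actually configured on a topic is not shown here.

```python
import math


def required_topic_bytes(offline_seconds, messages_per_second, message_bytes,
                         headroom=1.0):
    """Offline time x message rate x message size, times optional headroom."""
    raw = offline_seconds * messages_per_second * message_bytes
    return math.ceil(raw * headroom)


one_week = 7 * 24 * 60 * 60  # 604800 seconds
size = required_topic_bytes(one_week, messages_per_second=10, message_bytes=512)
print(size)  # 3096576000 bytes
```

In practice, you would likely add some headroom on top of the raw figure, since both the offline time and the message rate are estimates.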

Image downloads during uneven connectivity

Container images can, at times, be large. An application is often a combination of several images. And outages can occur in the middle of image downloads. The Avassa Edge Platform takes the following precautions to minimize the impact of network outages during downloads:

- The application will not start until all images are downloaded. If containers started as soon as the images were available, it might result in a stale application.

- Downloading only the changed container layers minimizes bandwidth usage, increasing the likelihood of successful downloads during short connection time slots.

- The Edge Enforcer can continue the download precisely where it was interrupted by the disconnection. It does not start the download all over again.
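The resume behavior in the last bullet can be illustrated with a byte-offset loop, similar in spirit to HTTP range requests. This is a sketch under assumptions, not the Edge Enforcer's download code: `download_with_resume`, `FlakySource`, and the sample data are all hypothetical.

```python
def download_with_resume(fetch_range, total_size, chunk_size=8, max_attempts=100):
    """Fetch total_size bytes, resuming from the last received offset."""
    received = bytearray()
    attempts = 0
    while len(received) < total_size:
        attempts += 1
        if attempts > max_attempts:
            raise TimeoutError("gave up waiting for connectivity")
        start = len(received)
        end = min(start + chunk_size, total_size)
        try:
            received.extend(fetch_range(start, end))
        except ConnectionError:
            # Keep what we already have and retry from the same offset.
            continue
    return bytes(received)


class FlakySource:
    """Serves byte ranges of a blob but drops the link on selected calls."""

    def __init__(self, blob, fail_on_calls):
        self.blob = blob
        self.fail_on_calls = set(fail_on_calls)
        self.calls = 0
        self.bytes_served = 0

    def fetch_range(self, start, end):
        self.calls += 1
        if self.calls in self.fail_on_calls:
            raise ConnectionError("link down")
        self.bytes_served += end - start
        return self.blob[start:end]


blob = b"layer-data-0123456789"
source = FlakySource(blob, fail_on_calls={2, 3})
result = download_with_resume(source.fetch_range, len(blob))
```

Even though two requests fail in the middle, the source only ever serves each byte once: the download picks up at the offset where it stopped.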

Security aspects in offline scenarios

A disconnected site/host can also indicate physical theft. Avassa has built-in features to protect the data on the host for these scenarios. In order for data and applications to be available on the host, it must be given an unseal key from the Control Tower or neighbor Edge Enforcers when it boots. If not, no state or secrets are available.

Summary

The Avassa Edge Platform enables the highest possible application availability on the edge sites. Sites are autonomous and take all actions needed to self-heal locally. All artifacts required to reschedule applications on the site are cached and replicated amongst the Edge Enforcers.

This means that sites can be disconnected for weeks without any negative impact on the applications, even if issues or incidents occur. At the same time, the central Control Tower manages long-lived desired changes, like application upgrades, across disconnected sites. Furthermore, edge site administrators can perform local changes. These will be indicated centrally, and controlled reconciliation actions can be taken.

Benchmark Avassa against your current edge solution: which one gives you the highest degree of application availability?