Avassa for Edge AI

Where MLOps ends and deployment to production begins. Maximize the benefits of your Edge AI initiative with automated, remote deployment and operations at scale. Purpose-built for agile, future-proofed AI model operations in distributed edge environments.

No fuss. Just deploy, monitor, secure.

The field of Edge AI is growing at an impressively rapid pace. And for good reasons. Combining the potential of edge and AI can lead to unprecedented competitive advantage and data utilization. Today, enterprises need a comprehensive, simple, and secure way of operationalizing their containerized edge AI applications.

AI model deployment in distributed edge and on-premises environments comes with operational challenges. You must tackle heterogeneous edge infrastructure, discover GPUs and mount them into your applications, automatically configure model serving endpoints, and report model drift. MLOps tooling is great for designing and building your Edge AI application, but when it’s time to deploy, you need purpose-built tooling: tooling that can manage many sites and devices and that offers optimized ways of collecting and using telemetry data. It’s also key to consider day-two operations, such as remote monitoring and troubleshooting.

Introducing Avassa for Edge AI.

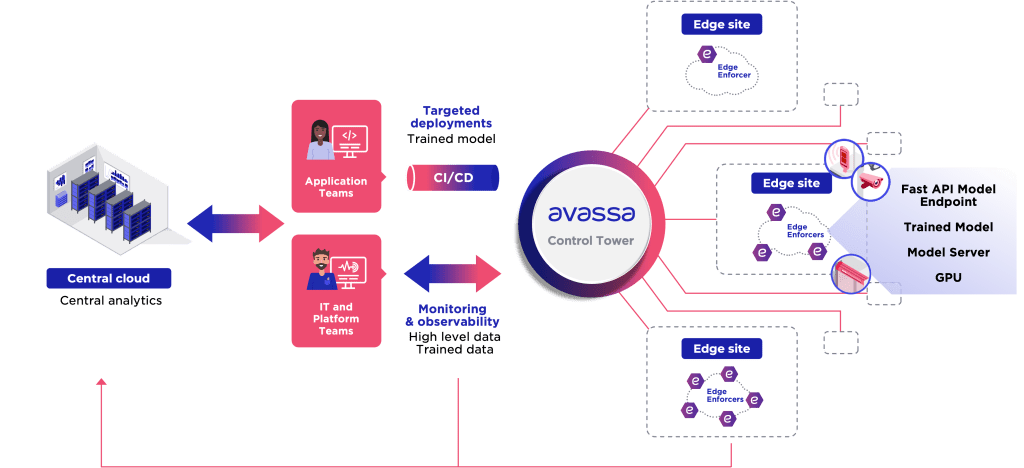

Avassa for Edge AI allows you to deploy, monitor, observe, and secure containerized AI models and applications at your edge using the Avassa Edge Platform. We empower you to develop, test, and release new versions with unprecedented speed, no matter the number of locations. That way, Avassa fills the missing gap: the crucial step of deploying and operating your models once they have been developed and built.

Automation accelerates feature implementation and deployment to the edge, enabling you to stay ahead in a rapidly evolving technological landscape and realize the full potential of your Edge AI operations. Avassa lets you automate the complete model deployment phase, including configuring model serving endpoints and deploying the complete set of containers the solution needs, such as API servers and data flow components.

By using standardized container technology, you can also reuse mainstream CI/CD and container tooling and competence. And by deploying your trained model as a container running at the edge, you embed all its dependencies in one atomic unit, making sure the trained model behaves as expected.

Combined with MLOps tooling of your choice, Avassa for Edge AI unleashes the power of Edge AI. It’s your gateway to seamless deployment and operation of distributed on-site Edge AI applications and trained models.


Case Description: Avassa for Edge AI

In this case description, we look at the challenges, key drivers, and resulting benefits of an Avassa for Edge AI implementation. An equipment provider for production lines embarked on a quest to revolutionize its operations through automation.

Features of Avassa for Edge AI

- Automated deployment of Edge AI models and applications. Newly trained models can easily be updated at scale.

- Automatic deployment and configuration of model serving endpoints. Configure ingress networking on the site and deploy any needed API components (e.g., FastAPI).

- Automatic discovery and management of GPUs and devices/sensors. When each edge site has several hosts, easily discover GPUs and external devices such as cameras and sensors. Components that require these resources are automatically placed on the corresponding host.

- Complete shrink-wrapped solution for your AI model and edge applications. Most AI models live together with other containers at the edge. Avassa provides a complete solution for managing them all.

- Application resilience offline. Fault-tolerant clustering of business-critical edge AI applications, independent of internet connectivity. The Avassa edge clusters are fully autonomous and your Edge AI application will survive in case of local failures.

- Edge-native telemetry bus. We provide an embedded telemetry bus running at each edge site. It simplifies the collection of sensor data and reduces the application development effort needed to collect, filter, enrich, and aggregate data.

- Observability for both application and site health. Get real-time insight into the health of the deployed Edge AI applications, with built-in tools for model drift thresholds and triggers.

- Intrinsic security for sensitive edge data. Edge hosts might be stolen and networks can be sniffed. The Avassa platform protects all application data and network traffic.
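To make the idea of a model drift threshold concrete, here is a small, self-contained sketch. It is not Avassa’s built-in tooling. It assumes you compare a baseline sample of some model signal (for example, prediction confidence) with a recent sample collected at the edge, using the Population Stability Index (PSI), and it uses 0.2 as the alert threshold, which is a common rule of thumb rather than a product default. The names `bin_fractions`, `psi`, `drift_alarm`, and `DRIFT_THRESHOLD` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical alert threshold; 0.2 is a common PSI rule of thumb, not an Avassa default.
DRIFT_THRESHOLD = 0.2


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Return the fraction of values in each bin [edges[i], edges[i+1]).

    The last bin also includes its right edge; values outside the edges are ignored.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            is_last = i == len(edges) - 2
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts) or 1  # avoid division by zero for empty input
    return [c / total for c in counts]


def psi(baseline: Sequence[float], recent: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a recent sample."""
    p = bin_fractions(baseline, edges)
    q = bin_fractions(recent, edges)
    # eps keeps log() finite when a bin is empty in one of the samples
    return sum((qi - pi) * math.log((qi + eps) / (pi + eps)) for pi, qi in zip(p, q))


def drift_alarm(baseline: Sequence[float], recent: Sequence[float],
                edges: Sequence[float],
                threshold: float = DRIFT_THRESHOLD) -> Tuple[float, bool]:
    """Return the PSI score and whether it exceeds the (hypothetical) threshold."""
    score = psi(baseline, recent, edges)
    return score, score > threshold


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    baseline = [0.1, 0.3, 0.6, 0.9] * 25   # confidences spread evenly across bins
    recent = [0.9] * 100                   # all recent confidences in the top bin
    print(drift_alarm(baseline, baseline, edges))  # (0.0, False): no drift
    print(drift_alarm(baseline, recent, edges))    # large score, alarm raised
```

In a real deployment, a check like this would run next to the model at each site, with the score reported as telemetry; the binning and threshold would be tuned to your model.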

Edge AI Solution Blueprint

An efficient Edge AI toolkit should be designed to make the lifecycle management of applications and models a breeze. An Edge AI toolkit should contain:

- Your favorite AI/ML software toolkit

- An automated CI/CD pipeline to build the AI models as containers

- Edge infrastructure that won’t hold you back. The good thing is that you can pick your favorite software and edge infrastructure for all of the above.

- An edge container orchestration solution to manage the edge sites and automatically deploy model containers and components to the edge.

Yes, you guessed it: Avassa for Edge AI.

With the Avassa platform, you deploy your Edge AI applications and models to distributed sites. The sites can have heterogeneous infrastructure: a mix of Intel and ARM hosts with different capabilities. The Edge Enforcer then manages your edge applications at each edge site and schedules them on hosts with the relevant capabilities, such as GPUs and devices. On sites with several hosts, the Edge Enforcer makes sure your Edge AI application can self-heal, even without connectivity to the central cloud.
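The capability-based scheduling described above can be pictured with a toy placement function. This is a hypothetical sketch, not the Edge Enforcer’s actual algorithm or configuration format: the `Host` and `Service` types, their fields, and the best-fit rule for GPUs are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Host:
    """A host at an edge site (hypothetical model, not an Avassa type)."""
    name: str
    arch: str                                        # e.g. "amd64" (Intel) or "arm64"
    free_gpus: int = 0
    devices: Set[str] = field(default_factory=set)   # e.g. {"camera0"}


@dataclass
class Service:
    """A container with capability requirements (hypothetical model)."""
    name: str
    archs: Set[str]                                  # architectures the image is built for
    gpus: int = 0
    devices: Set[str] = field(default_factory=set)


def place(service: Service, hosts: List[Host]) -> Optional[Host]:
    """Pick a host that satisfies the service's requirements, or None.

    Among matching hosts, choose the one left with the fewest spare GPUs
    (best fit), so hosts with more GPUs stay free for GPU-heavy services.
    """
    candidates = [
        h for h in hosts
        if h.arch in service.archs
        and h.free_gpus >= service.gpus
        and service.devices <= h.devices             # all required devices present
    ]
    if not candidates:
        return None
    best = min(candidates, key=lambda h: h.free_gpus - service.gpus)
    best.free_gpus -= service.gpus                   # reserve the GPUs
    return best


if __name__ == "__main__":
    site = [
        Host("edge-1", "amd64"),
        Host("edge-2", "amd64", free_gpus=1, devices={"camera0"}),
        Host("edge-3", "arm64"),
    ]
    for svc in [
        Service("inference", {"amd64"}, gpus=1),
        Service("camera-reader", {"amd64", "arm64"}, devices={"camera0"}),
        Service("api", {"amd64", "arm64"}),
    ]:
        host = place(svc, site)
        print(svc.name, "->", host.name if host else "unschedulable")
```

With this input, the GPU-bound inference container and the camera reader both land on `edge-2`, while the API container goes to `edge-1`. A production scheduler would also handle host failures by re-running placement on the remaining hosts.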

Build intelligent edge applications as a composition of containers for purposes such as protocol adaptors, ML libraries, and data analytics. The Edge Enforcer also enables edge site telemetry management, local storage, and processing. In addition, it manages the forwarding of enriched and filtered data to the cloud, including edge caching and handling of connectivity issues.
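The filter, enrich, aggregate, and forward steps can be sketched in plain Python. This is an illustration only, not the Avassa telemetry bus API: the reading format, the `TelemetryPipeline` class, and the `send` callback standing in for the cloud uplink are all hypothetical. Summaries are cached in a bounded queue at the edge and forwarded when the uplink works, so a connectivity outage delays data rather than losing it, until the cache fills and the oldest summaries are dropped.

```python
from collections import defaultdict, deque
from statistics import mean
from typing import Callable, Deque, Dict, List, Optional


class TelemetryPipeline:
    """Hypothetical edge-side pipeline: filter -> enrich -> aggregate -> forward."""

    def __init__(self, site: str, max_cached: int = 1000) -> None:
        self.site = site
        # Bounded edge cache: when full, the oldest summary is discarded.
        self.cache: Deque[dict] = deque(maxlen=max_cached)

    def summarize(self, readings: List[dict]) -> dict:
        """Aggregate raw readings like {"sensor": "temp", "value": 21.5}."""
        by_sensor: Dict[str, List[float]] = defaultdict(list)
        for r in readings:
            value: Optional[float] = r.get("value")
            if value is None:            # filter: drop invalid readings
                continue
            by_sensor[r["sensor"]].append(value)
        summary = {
            "site": self.site,           # enrich: tag data with its origin
            "sensors": {                 # aggregate: one small record per sensor
                name: {"count": len(vals), "mean": mean(vals), "max": max(vals)}
                for name, vals in by_sensor.items()
            },
        }
        self.cache.append(summary)
        return summary

    def flush(self, send: Callable[[dict], bool]) -> int:
        """Forward cached summaries in order; stop at the first failed send."""
        sent = 0
        while self.cache:
            if not send(self.cache[0]):  # uplink down: keep the rest cached
                break
            self.cache.popleft()
            sent += 1
        return sent


if __name__ == "__main__":
    pipe = TelemetryPipeline("site-a")
    pipe.summarize([{"sensor": "temp", "value": 20.0},
                    {"sensor": "temp", "value": 22.0},
                    {"sensor": "temp", "value": None}])
    print(pipe.flush(lambda s: False))   # offline: prints 0, summary stays cached
    uploaded: List[dict] = []
    print(pipe.flush(lambda s: uploaded.append(s) or True))  # online: prints 1
```

Sending one small summary per sensor instead of every raw reading is what keeps uplink traffic low; the trade-off is that raw data stays at the site unless you forward it explicitly.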

Give it a go! Request a free trial today to start using Avassa for Edge AI on your own Edge AI applications and models.

Avassa for Edge AI Demos

In this short demo, we show how to lifecycle manage Edge AI models at the edge.

Solution walkthrough

GPU management

Resources

Packaging and deploying an ML serving system to the edge

Published on: October 17, 2023

There once was a lifecycle of a machine learning servable on the edge… The rapid uptake of applied machine learning across many tasks and industries is largely…

How to trace Edge Applications with OpenTelemetry in the Avassa Edge Platform

Published on: September 21, 2023

Edge sites often have a set of communicating applications. An end-user transaction on the site results in a sequence of calls between the edge applications. Response times…

Deploying HiveMQ Edge in multiple sites

Published on: September 1, 2023

Edge computing and IoT have a happy marriage. IoT focuses on devices at the edge and data collection. Edge computing enables local processing of that data. There…

Avassa in NVIDIA Inception

We are proud members of NVIDIA’s Inception Program.