Edge Computing: 5 Years of Navigating a Category In the Making

The first five years of Avassa have been anything but predictable, because the edge computing market hasn’t been very predictable itself. When we started back in 2020, there was no clear category and very few real-world deployments to learn from. What we did have was a conviction: that edge computing wasn’t just cloud-in-a-smaller-box, and that applying traditional tools to completely new characteristics would fail. So we went a different way.

This story is told through two lenses: that of our Head of Product, Stefan Wallin, and that of our Head of Marketing, Amy Simonson. One focused on building a platform for the realities of the edge. The other on shaping the language of a category still being defined. Together, these voices reflect how we’ve navigated ambiguity with purpose, built trust through clarity, and stayed opinionated in a space where some things are still up for grabs.

An Opinionated Approach: Problem First, Solution Second

From a Head of Product

Look at edge computing infrastructure and you’ll find familiar components (applications, containers, Linux, local networking), and it is tempting to conclude, “Ah, that’s Kubernetes.” Problem solved? Well, not quite.

That assumption skips over the very challenges that define the edge: thousands of distributed locations, limited compute capacity, segmented local networks, intermittent connectivity, no on-site IT staff, no perimeter security, and operational costs that scale linearly with the number of sites. Applying cloud-native tools without rethinking the edge problem leads to fragile, high-touch deployments.

We had to start with the problem itself:

How do you orchestrate workloads across thousands of locations with full automation, offline capabilities, and minimal operational overhead?

This led us to two core design decisions:

- A lightweight edge agent, Edge Enforcer, embedding all necessary orchestration functions, built for offline-first operation and near-zero operational overhead.

- A scalable orchestrator, Control Tower, capable of managing hundreds of thousands of edge sites with full automation, designed from the ground up for the distributed edge.

Solving edge orchestration isn’t about repackaging mainstream tools; it’s about confronting the problem head-on. Only by understanding the operational constraints of real-world edge environments can you design a solution that works at scale, offline, and without constant human intervention. That’s why we didn’t start with Kubernetes. We started with the edge.

From a Head of Marketing

As a marketer, my approach has always been rooted in the same principle that drives our product strategy: start with the problem. In edge computing, that’s not optional. It’s essential.

In these first five years, we’ve seen that many organizations aren’t yet at a stage where the “edge challenge” is fully visible to them. They haven’t scaled out enough, containerized enough, or connected the dots to realize they’re facing an application management problem. That’s what makes this space both demanding and deeply rewarding: we’re building the category while building the company.

Our marketing hasn’t been about selling features off the rack. It’s been about having real conversations with IT architects, platform teams, application developers, and operations leads about what happens when they try to run applications across tens, hundreds, and thousands of locations. Their challenges are what shaped our language. And often, we’ve helped them see problems they hadn’t named yet (or that they called something else, but more on that later) and how solving them unlocks entirely new capabilities.

Being opinionated in our solution design has been a strength in how we communicate. It gives us clarity. Instead of promising flexibility for every use case, we explain what edge orchestration should look like, and why that matters. That kind of conviction builds trust. And in an emerging category, trust is everything.

Building Trust in a Market Lacking the Comfort of Pioneers to Follow

From a Head of Product

What we’re seeing in edge computing deployments today simply didn’t exist five years ago when we began building our platform. At that time, the edge was more of a vision than an established market—with few real deployments and limited customer feedback to guide product development.

Designing a product “on paper,” without real-world validation, is risky. History is full of examples where such efforts missed the mark. So how did we approach building an edge orchestration platform in an early market? Several guiding principles helped shape a product that now puts us in a leading position.

1. Start with the hard problems.

We focused from the beginning on the most challenging aspects of the edge: massive distribution, automation without operators, offline-first behavior, and secure orchestration at scale. Solving these upfront gave us a foundation that scales with the market.

2. Rely on experience, but question everything.

Our core engineering team has deep experience solving non-trivial problems in distributed systems—scale, networking, security, automation. But we made it a priority to foster internal debate, question assumptions, and play devil’s advocate in design discussions. That culture of scrutiny has been key to our architecture’s strength.

3. Listen early—even if it’s imperfect.

Early adopters may not represent every edge challenge, but their feedback is invaluable. It shapes usability, hardening, documentation, and feature development. We also proactively engaged with non-customers, people with real opinions and edge needs, even if they weren’t ready to buy. Their input helped refine our direction.

4. Keep the end-goal in focus.

We treated our long-term vision as a lighthouse. It helped us prioritize features and architectural decisions, even when short-term needs were unclear. That discipline has paid off as edge computing matures into real-world deployments.

From a Head of Marketing

Effective marketing usually draws from three things: knowing your audience, applying creativity, and taking inspiration from others. That last one, the learning from others part, simply hasn’t been an option for us very often.

In these first five years, there’s been no clear playbook for edge management and operations. No dominant voice to follow. No well-defined category with success stories to point to. That forced us to build trust the hard way: through clarity, consistency, and honesty about both what we know and what the market is still figuring out.

It has also meant that our marketing couldn’t be passive. We had to be interesting, fun, and sometimes even a little provocative (I still don’t think Kubernetes at the edge makes sense). Just because we’re a B2B company, a highly technical one, or because we’re solving complex problems doesn’t mean we can’t get creative with messaging. If anything, it raises the bar. In a market this young, your story is your credibility.

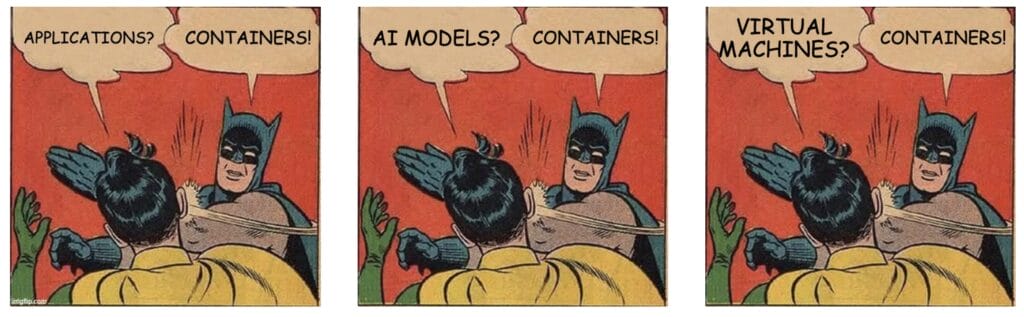

Learn from Batman. Containers first.

What’s made it worth it, and possible, is that everyone in this space is learning from each other. Customers, partners, and competitors are all navigating the same uncertainty. But I always strive to give our messaging a unique tone: confident, but kind. Visionary, but grounded. We’ve leaned into that. There’s no roadmap when you’re first. But that’s also the opportunity: to shape the conversation, set the expectations, and make our Edge Platform mean something to our users.

One of my personal favourites, where we had some fun with it, because it truly takes some people a little extra time to jump aboard the containerization train.

Which Edge Are We Talking About? A Taxonomy Soup

From a Head of Product

“Edge” is an overloaded term. Depending on who you ask, it might refer to service provider POPs, enterprise data centers, CDNs, wearable devices, or industrial sensors. And that creates hassle. The lack of a consistent taxonomy hasn’t just created confusion; it has actively distorted the conversation.

Much of the industry still frames edge computing as an extension of the cloud: latency optimization at regional points of presence, or revamped CDN infrastructure. But this cloud-centric view misses where the real operational challenges lie.

Today’s edge pressure comes from enterprises, like retailers, factories, and logistics operators. These organizations are pushing compute onto their own premises, deep within their networks, to increase resilience, ensure privacy, and handle volumes of data that can’t be shipped to the cloud. It’s not about proximity to the cloud; it’s about autonomy from it.

The taxonomy matters because it shapes how we approach the problem. When “edge” means cloud-adjacent POPs, you build for bandwidth. When “edge” means software running in every store, vehicle, and facility, you build for scale, heterogeneity, and offline autonomy. That’s the edge that needs orchestrating. This is not about building cloud-adjacent points of presence; it’s about transforming traditional on-prem environments into modern, automated edge infrastructures.

From a Head of Marketing

As a marketer, navigating the edge computing ecosystem has been both fascinating and, frankly, at times a little bit chaotic. Especially way back in 2020. The taxonomy has been there, but it’s far from universal or agreed upon. Every industry dresses up “the edge” in its own costume to fit its own narrative: in retail, the edge is the physical store; in industry, it’s the factory floor; in automotive, it’s inside the vehicle.

It’s all edge, and none of it is edge.

This ambiguity could easily lead to vague messaging and diluted positioning. But we’ve tried to take the opposite route: be precise, be consistent, and make up our minds. We defined our edge early, and stuck to it. That clarity has been crucial in building a brand, a value proposition and a messaging platform.

Meanwhile, the common definition of the edge is being shaped in a continuous process of co-creation with customers, partners, and the category as a whole. That helps us sharpen the language and evolve how we describe what we do. But the foundation remains: we focus on the on-site edge, where business-critical applications run outside the cloud, with no assumptions of reliable connectivity or on-site support. Leveraging the ecosystem has also proved to be an efficient way to explain our own business. What’s complicated to understand on its own might be easier to understand when laid out next to something the reader already knows.

In a category as crowded and as undefined as edge computing, picking your spot and standing by it is how you cut through the noise. And it’s how you make sure your message reaches the right people, in the right way.

These opinions are the authors’ own and do not represent the official policy of Avassa.