Overcoming the Second Application Challenge in Edge Computing

I spend my days thinking about the value edge applications can bring to my users and business, but I had not given any thought to the implications of running them without a platform. Now it strikes me as important.

Many enterprises have already deployed applications at the edge, often in traditional IoT scenarios tied to vendor-specific hardware. But as edge computing matures, strategies are evolving. Newer approaches, such as cloud-out (pushing cloud applications to edge sites) and edge-in (building applications at the edge, integrated with cloud services), are gaining traction.

What’s clear across the board? The edge is no longer a future concept – it’s delivering real value now.

However, in our conversations with IT leaders, a recurring theme has emerged: the biggest hurdle isn’t the first edge application – it’s what comes next.

This is what we call the second application challenge in edge computing, and it’s reshaping how enterprises think about scale, integration, and long-term operations across the distributed edge.

Scaling Pitfalls: The Second Application Challenge in Edge Computing

As enterprises push more functionality to the distributed edge, deploying containerized applications closer to users and machines becomes a strategic necessity. But early wins can obscure long-term operational pitfalls — especially when moving from a single deployment to many. That’s where the second edge application challenge emerges.

Why the First Edge Application Feels Like a Win

For software-defined enterprises, innovation speed is everything. The expectation is simple: move at the speed of software, across all environments, including the distributed edge.

That’s why many organizations start their edge journey by launching a single, self-contained application. It’s a low-friction way to prove the value of edge computing internally, deliver a quick win, and gain operational experience close to the edge.

What Changes When You Scale to Multiple Applications

But what feels like early success can quickly become a trap. As soon as you try to scale from one to many edge applications, the challenges begin to show.

Each new application risks becoming its own silo, with its own tooling, lifecycle, and operational processes. These application silos increase operational burden, reduce visibility, and make scaling edge applications costly, inefficient, and uncomfortable. Teams are soon juggling redundant maintenance tasks, inconsistent update pipelines, and growing complexity across edge locations.

Defining the Second Edge Application Challenge

This is what we call the second edge application challenge: the failure to prepare for a multi-application environment from day one. It’s an edge computing challenge that sneaks in after the first win, and one that’s difficult to unwind once it’s entrenched.

Ignoring this challenge leads to operational stovepipes (one per application) and long-term fragility in your edge stack. To truly succeed, teams must think beyond the first application and architect for multi-application scale from the start.

This leads us to a critical piece of the puzzle: how edge application vendors contribute to this challenge, and how they could help solve it.

How Application Vendors Risk Limiting Edge Scalability

As edge computing adoption grows, many enterprises remain stuck in a first-application mindset — and so do many of the vendors that serve them. This dynamic has shaped how edge application vendors build and deliver their software, often in ways that limit long-term scalability and interoperability.

Vendor-Centric Edge Stacks Are Still the Norm

In today’s market, it’s common for edge application vendors to ship their applications bundled with proprietary deployment, update, and monitoring tooling — forming a self-contained infrastructure stack. While this can simplify the rollout of a single application, it also locks enterprises into a narrow operational model that doesn’t scale well.

Why Vendors Don’t Prioritize Scalable Infrastructure

For many vendors, the application itself is the core value proposition — not the surrounding infrastructure. As a result, they often build only the bare minimum operational tooling needed to support their specific use case. These tools are usually tightly coupled to their own applications and lack the flexibility to support third-party workloads or broader enterprise requirements.

These tools meet basic operational needs but rarely go further. Enterprises are left with subpar scalability and integration capabilities that don’t fit into a larger distributed edge cloud strategy.

The Risk of Lock-In and Poor Interoperability

This vendor-centric approach has significant downsides. Enterprises are left managing fragmented stacks that can’t easily host or orchestrate additional applications. Vendors have little incentive to make their infrastructure interoperable. In fact, doing so may increase their own support burden.

The result: limited flexibility, application silos, and high operational overhead. IT teams are forced to either maintain separate toolchains for each vendor or re-architect entirely when trying to scale or introduce new workloads at the edge.

These vendor-driven limitations compound the operational burden enterprises face as they move beyond the first edge application.

The Need for Unified Edge Infrastructure

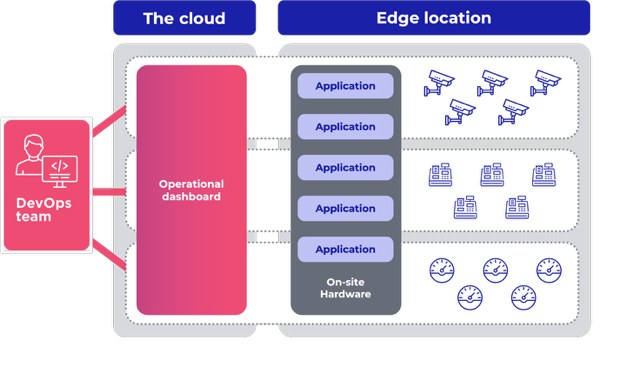

Managing edge environments at scale becomes unsustainable when each application arrives with its own vendor-specific stack. This fragmentation leads to duplicated tooling, inconsistent operations, and poor visibility across sites. A shared infrastructure layer changes that — enabling teams to reuse deployment pipelines, standardize monitoring and security, and reduce operational overhead. By unifying the edge under a common operational model, enterprises gain the control and efficiency needed to scale with confidence.
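The Python sketch below makes the idea of a shared operational view more concrete. It assumes, hypothetically, that every application reports health through the same shared layer as simple `(site, app, healthy)` records. `AppStatus` and `rollup` are illustrative names, not part of any specific product. Because all applications use one reporting format, a single function can produce a per-site view of what needs attention. There is no need for one dashboard per vendor stack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppStatus:
    """Hypothetical health record emitted by a shared infrastructure layer."""
    site: str      # edge location identifier, e.g. "store-001"
    app: str       # application name, whichever vendor or team built it
    healthy: bool


def rollup(statuses: list[AppStatus]) -> dict[str, list[str]]:
    """Return, for each reporting site, the sorted list of unhealthy apps.

    An empty list means every app at that site reported healthy.
    """
    view: dict[str, list[str]] = {}
    for status in statuses:
        # setdefault ensures fully healthy sites still appear in the view
        unhealthy = view.setdefault(status.site, [])
        if not status.healthy:
            unhealthy.append(status.app)
    return {site: sorted(apps) for site, apps in sorted(view.items())}


if __name__ == "__main__":
    reports = [
        AppStatus("store-001", "pos", True),
        AppStatus("store-001", "vision", False),
        AppStatus("store-002", "pos", True),
    ]
    print(rollup(reports))  # {'store-001': ['vision'], 'store-002': []}
```

The design choice that matters here is the common record format. Adding a third application adds more records, not a third monitoring stack.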

Scaling Operations Across Siloed Edge Infrastructures

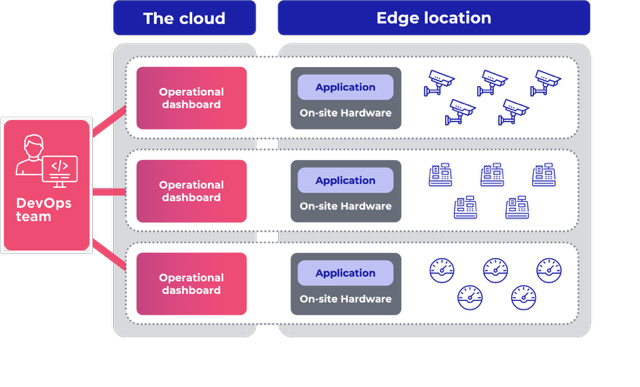

Building consistent application operations on top of vendor-specific, vertically integrated edge stacks is inherently difficult. Each solution introduces its own tooling and approach to deployment, monitoring, observability, and security — creating fragmented operational domains that limit scalability and efficiency.

1. Fragmented Operational Domains at the Edge

When every edge application comes with its own infrastructure, teams end up managing a patchwork of operational environments. These siloed stacks force enterprises to adopt a different operational model for each application, with no shared control plane, no unified policies, and no consistent workflows.

2. Minimal Feature Sets Lead to Operational Gaps

Most vendor-provided infrastructure offers only the bare minimum of operational capabilities, lacking mature features for observability at the edge, security, or robust update mechanisms. As the number of deployed applications increases, these gaps prevent teams from evolving best practices, optimizing performance, or maintaining compliance across environments.

This stagnation directly limits teams’ ability to scale and operate edge applications reliably over time.

3. No Reuse, No Visibility, and High Maintenance Overhead

Because each application stack is vendor-specific, there’s no reuse across platforms. Application teams must reconstruct an operational view from scratch for each vendor, typically using only the lowest common denominator of features.

The result: duplicated effort, reduced visibility, and high maintenance overhead. As more applications are deployed, this fragmented approach makes a unified operational view nearly impossible to achieve. That slows incident response, inflates costs, and increases the risk of systemic failures.

We like to think about this a little differently. Enterprises need a better path forward: a platform approach that enables shared tooling, consistent operations, and scalable edge application management.

How to Solve the Second Edge Application Challenge

Solving the second edge application challenge requires a mindset (and application strategy) shift. Enterprises must stop thinking of the edge as a collection of isolated workloads and start treating it as a scalable, distributed platform-as-a-service (PaaS) designed to support multiple applications across diverse environments.

1. Rethinking the Edge as a Scalable Digital Platform

Rather than viewing the edge as a fragmented environment of standalone applications, organizations should approach it as a unified platform. This shift enables shared services, consistent observability, and unified deployment pipelines across all edge locations. It also lays the foundation for long-term scalability and resilience.

2. Supporting Both Internal and Third-Party Applications

A truly effective edge platform supports both in-house developed applications and commercial third-party workloads, without creating new silos. This requires a shared infrastructure layer that decouples application logic from operational plumbing, making it easier to manage diverse workloads without duplicating effort or tooling.

By breaking away from vendor-specific stacks, enterprises can establish an operational model that’s reusable, extensible, and optimized for evolving business needs.
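One way to picture a shared layer that decouples application logic from operational plumbing is a single application descriptor used for every workload, whoever built it. The sketch below is a hypothetical illustration in Python. `AppSpec`, `deployment_plan`, and the `"shared-agent"` monitoring hook are assumed names, not a real platform API. It shows an in-house service and a third-party product going through the same rendering step, so neither needs its own pipeline.

```python
from dataclasses import dataclass, field


@dataclass
class AppSpec:
    """Hypothetical, vendor-neutral description of one edge application."""
    name: str
    image: str        # container image repository
    version: str      # image tag to deploy
    origin: str       # "internal" or "third-party"; informational only
    env: dict[str, str] = field(default_factory=dict)


def deployment_plan(spec: AppSpec, sites: list[str]) -> list[dict]:
    """Render identical per-site deployment steps for any app, regardless of origin."""
    return [
        {
            "site": site,
            "app": spec.name,
            "image": f"{spec.image}:{spec.version}",
            "env": dict(spec.env),          # copy so sites never share a mutable dict
            "monitoring": "shared-agent",   # assumed shared monitoring hook
        }
        for site in sites
    ]


if __name__ == "__main__":
    in_house = AppSpec("inventory", "registry.example.com/inventory", "3.2", "internal")
    vendor = AppSpec("vision", "registry.example.com/vision", "1.4", "third-party",
                     {"CAMERA_COUNT": "4"})
    for spec in (in_house, vendor):
        for step in deployment_plan(spec, ["store-001", "store-002"]):
            print(step["site"], step["image"], step["monitoring"])
```

The `origin` field is deliberately never branched on. Treating internal and third-party workloads identically is what keeps a new application from becoming a new silo.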

3. Closing the Cloud-to-Edge Operational Gap

For teams used to deploying in the public cloud, moving to distributed edge environments can feel daunting without proper tooling. The traditional edge model creates friction for cloud-native workflows. But with a PaaS-style approach, the operational gap narrows.

Application teams gain familiar tooling, standardized APIs, and a deployment model that reflects what they already know from cloud environments, but adapted for the operational edge.

To unlock the full value of edge computing, enterprises must treat the edge not as a one-off experiment but as a unified, scalable, multi-application platform built for long-term growth.

What Enterprises Should Look for in a Scalable Edge Platform

To support multi-application edge environments at scale, enterprises need more than a one-off deployment tool. They need a scalable edge platform built for flexibility, security, and operational consistency. Key capabilities to prioritize include:

- Secure remote updates that work reliably across thousands of distributed sites.

- Vendor-neutral tooling to avoid lock-in and support both internal and third-party applications.

- Multi-tenancy to run and isolate multiple applications across teams or business units.

- Cloud-to-edge integrations in deployment, monitoring, and automation workflows, so teams can operate the edge with the same confidence as the cloud.

- Fine-grained access control to enforce role-based permissions at both the application and infrastructure levels.

- Declarative configuration management for defining desired state across edge locations, ensuring consistency without manual intervention.

- Comprehensive application lifecycle management, including onboarding, versioning, rollback, and graceful decommissioning.

- Site health awareness that integrates infrastructure and application status, enabling smarter remediation and proactive maintenance.

- Integration with CI/CD pipelines to streamline application delivery from development to distributed edge deployment.

A platform that delivers on these principles enables true edge application operations at scale — with lower cost, less complexity, and faster time-to-value.
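Of the capabilities listed above, declarative configuration management most directly replaces manual, per-site work. The minimal Python sketch below shows the core idea under simplifying assumptions. Desired and actual state are plain `{app: version}` maps, and `reconcile` and `reconcile_fleet` are hypothetical helpers rather than any product's API. Real platforms also handle ordering, retries, health checks, and rollback, which are omitted here.

```python
Action = tuple[str, str, str]  # (verb, app, version)


def reconcile(desired: dict[str, str], actual: dict[str, str]) -> list[Action]:
    """Compute the actions that move one site from its actual to its desired state."""
    actions: list[Action] = []
    for app, version in desired.items():
        if app not in actual:
            actions.append(("install", app, version))
        elif actual[app] != version:
            actions.append(("update", app, version))
    for app, version in actual.items():
        if app not in desired:
            actions.append(("remove", app, version))
    return sorted(actions)  # deterministic order: install, remove, update


def reconcile_fleet(desired: dict[str, str],
                    fleet: dict[str, dict[str, str]]) -> dict[str, list[Action]]:
    """Apply the same desired state to every site; sites already in sync get []."""
    return {site: reconcile(desired, actual) for site, actual in fleet.items()}


if __name__ == "__main__":
    desired = {"pos": "2.1", "vision": "1.4"}
    fleet = {
        "store-001": {"pos": "2.0", "legacy-agent": "0.9"},
        "store-002": {"pos": "2.1", "vision": "1.4"},
    }
    for site, actions in reconcile_fleet(desired, fleet).items():
        print(site, actions)
```

For `store-001`, this reports an install of `vision`, an update of `pos`, and removal of `legacy-agent`. For `store-002`, which already matches, it reports no actions. Because the function only computes a diff, running it again after the actions are applied yields an empty list. That property makes the approach safe to run repeatedly across many sites.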
