Avassa for Edge AI: Seamless Deployment and Scalable Management of Edge AI Solutions

Edge AI brings artificial intelligence closer to where data is created: at the edge. With Avassa’s Edge AI Platform, businesses can deploy, monitor, and manage AI models in distributed environments, unlocking faster decision-making, data privacy compliance, and greater resilience. Move beyond traditional MLOps with a scalable, secure, and automated solution built for real-time edge deployments.

What Is Edge AI and Why It Matters Today

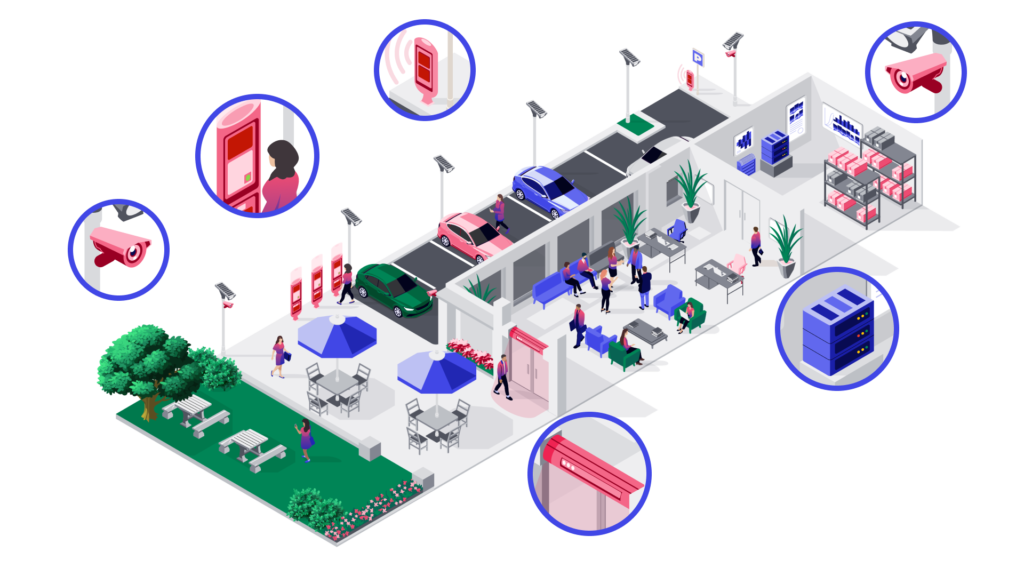

Edge AI refers to running artificial intelligence models directly on edge devices, close to where data is generated, instead of relying solely on centralized cloud computing. This approach enables faster decision-making, reduced bandwidth use, and improved data privacy, which are key advantages as industries increasingly adopt AI for real-time automation and analytics. The growing demand for instant insights, combined with advances in hardware and containerized workloads, has made Edge AI a major trend. Yet managing and updating AI applications across hundreds of distributed locations is complex. This is where Avassa’s edge computing platform brings value, simplifying deployment, monitoring, and lifecycle management of Edge AI workloads at scale.

The Challenge — Managing Edge AI Workloads at Scale

The field of Edge AI is growing rapidly, and for good reason. Combining edge computing with AI can create a significant competitive advantage and make better use of the data generated on site. Today, enterprises need a comprehensive, simple, and secure way to operationalize their containerized Edge AI applications.

Deploying AI models in distributed edge and on-premises environments comes with operational challenges. Teams must cope with heterogeneous edge infrastructure, discover GPUs and mount them into applications, automatically configure model-serving endpoints, and detect and report model drift.

Operational Complexity:

Running AI at the edge brings its own challenges. Each site might have different hardware and network setups, so a deployment that works in one location may fail in another. AI models also need frequent updates and retraining to stay accurate, which is hard to coordinate across hundreds of distributed edge devices. Monitoring and maintaining this fleet over unreliable or low-bandwidth connections demands automation and observability built for edge environments.

Limitations of Traditional Tools:

While MLOps frameworks and Kubernetes provide strong foundations for AI development and cloud computing, they were not designed for large-scale, distributed edge environments. They lack built-in capabilities for local autonomy, secure updates, and real-time visibility across diverse infrastructures.

Managing Edge AI workloads at scale therefore requires an edge computing platform that extends modern DevOps practices to the edge, bridging the gap between AI innovation and reliable operations in the physical world.

Introducing Avassa for Edge AI.

Avassa for Edge AI: Complete Model Deployment & Lifecycle Management

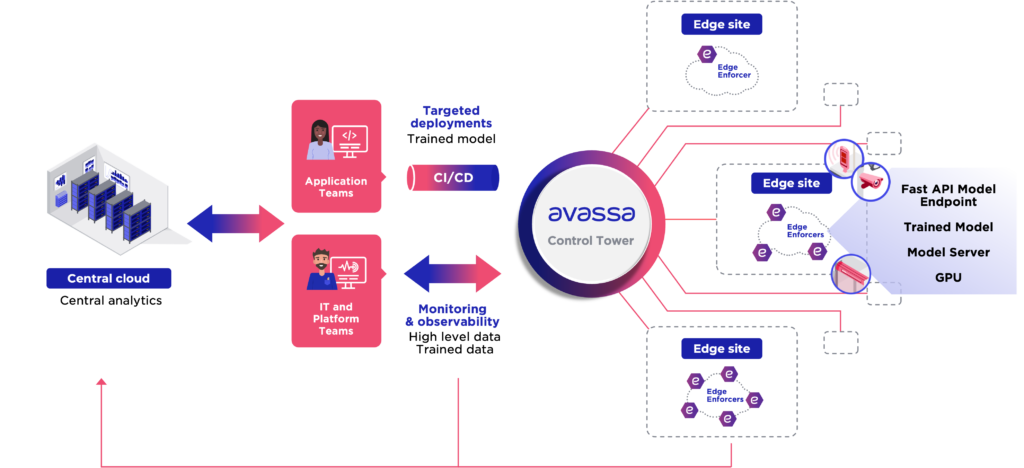

Avassa for Edge AI enables organizations to deploy, monitor, update, and secure containerized AI models across distributed edge environments. With the Avassa Edge Platform, teams can operationalize their AI models in an automated and reliable fashion, turning development outcomes into production-ready deployments across any number of locations.

But Avassa doesn’t just handle deployment; it manages the full AI model lifecycle at the edge. From version control and configuration to continuous updates and monitoring, Avassa ensures your models stay accurate, secure, and high-performing throughout their lifetime. Automation accelerates rollout and maintenance, allowing AI workloads to evolve with your data and business needs.

By using standardized container technology, Avassa integrates seamlessly with existing CI/CD pipelines and development workflows. Each trained model runs as a self-contained unit with all dependencies included, ensuring consistent behavior across diverse industrial edge devices. The result is faster iteration, reduced manual work, and confident operations at scale.

How can organizations manage Edge AI workloads without Kubernetes?

While Kubernetes is an excellent platform for cloud environments, it’s not optimized for managing distributed edge AI workloads. Its complexity in setup and ongoing management, poor performance in low-connectivity conditions, and difficulty scaling across hundreds of small edge nodes make it impractical at the edge.

Avassa provides Kubernetes-level automation, without the overhead of actually using Kubernetes. Its lightweight architecture is purpose-built for on-site edge nodes, operating reliably whether connected or offline. With centralized visibility and control through the Avassa Control Tower, organizations gain the orchestration power they need to run Edge AI at scale, securely, efficiently, and with ease.

Combined with MLOps tooling of your choice, Avassa for Edge AI unleashes the power of Edge AI. It’s your gateway to seamless deployment and operation of distributed on-site Edge AI applications and trained models.


Case Study: Real-World Avassa for Edge AI Applications

This case study describes the challenges, key drivers, and benefits of an Avassa for Edge AI implementation. An equipment provider for production lines set out to transform its operations through automation.

Key Features of Avassa for Edge AI

Avassa provides a comprehensive edge AI platform to manage AI at the edge, ensuring smooth deployment and security.

- Automated deployment of Edge AI models and applications. Newly trained models can easily be updated at scale.

- Automatic deployment and configuration of model-serving endpoints. Configure ingress networking on the site and deploy any needed API components (e.g., FastAPI).

- Automatic discovery and management of GPUs and devices/sensors. When an edge site has several hosts, GPUs and external devices such as cameras and sensors are discovered automatically, and the components that require them are automatically placed on the corresponding host.

- Complete shrink-wrapped solution for your AI models and edge applications. Most AI models run alongside other containers at the edge. Avassa provides a complete solution for managing them all.

- Application resilience offline. Fault-tolerant clustering of business-critical Edge AI applications, independent of internet connectivity. Avassa edge clusters are fully autonomous, so your Edge AI application survives local failures.

- Edge-native telemetry bus. We provide an embedded telemetry bus running at each edge site. It simplifies the collection of sensor data and reduces the application development effort needed to collect, filter, enrich, and aggregate data.

- Observability for application and site health. Get real-time insight into the health of deployed Edge AI applications, with built-in tools for defining model drift thresholds and triggering on them.

- Intrinsic security for sensitive edge data. Edge hosts might be stolen and networks can be sniffed. The Avassa platform protects all application data and network traffic.
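The observability bullet above mentions model drift thresholds. As a minimal sketch of what such a threshold check can look like, assuming drift is measured with the Population Stability Index (PSI) over one model input or output score, the Python below compares a recent window of values against a baseline and flags drift above a threshold. The function names, the ten equal-width bins, and the 0.2 cut-off (a common rule of thumb) are illustrative assumptions, not Avassa’s built-in drift tooling.

```python
import math
from typing import Sequence


def psi(baseline: Sequence[float], recent: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a recent sample.

    Assumption: equal-width bins spanning the baseline's min..max range.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width for a constant baseline

    def fractions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        eps = 1e-6  # keeps log() defined for empty bins
        return [max(c / len(values), eps) for c in counts]

    expected, actual = fractions(baseline), fractions(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_detected(baseline: Sequence[float], recent: Sequence[float],
                   threshold: float = 0.2) -> bool:
    """Hypothetical alert rule: report drift when PSI exceeds the threshold."""
    return psi(baseline, recent) > threshold


baseline = [i / 100 for i in range(100)]      # roughly uniform scores
shifted = [0.9 + i / 1000 for i in range(100)]  # scores crowded near the top
print(drift_detected(baseline, baseline), drift_detected(baseline, shifted))
```

Quantile-based bins and per-feature thresholds are common refinements; the equal-width version is kept here for readability.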

Edge AI Architecture: The Avassa Blueprint

An efficient Edge AI toolkit should make lifecycle management of applications and models a breeze. It should contain:

- Your favorite AI/ML software toolkit

- An automated CI/CD pipeline to build the AI models as containers

- Edge infrastructure that won’t hold you back (the good news is that you can pick your favorite software and edge infrastructure for the items above)

- An edge container orchestration solution to manage the edge sites and automatically deploy model containers and components to the edge.

Yes, you guessed it: Avassa for Edge AI.

With the Avassa platform, you deploy your Edge AI applications and models to distributed sites. The sites can have heterogeneous infrastructure: a mix of Intel and ARM hosts with different capabilities. The Edge Enforcer then manages your edge applications at each site and schedules them on hosts with the relevant capabilities, such as GPUs and attached devices. On sites with several hosts, the Edge Enforcer can make sure your Edge AI application self-heals, even without connectivity to the central cloud.
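To illustrate the kind of capability-aware placement described above, here is a minimal Python sketch. It is not the Edge Enforcer’s actual scheduler: the `Host` and `Component` types, the capability fields, and the least-loaded tie-break are hypothetical. Each component declares the capabilities it needs (CPU architecture, a GPU, attached devices) and is placed on a matching host at the site, or reported as unschedulable.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Host:
    name: str
    arch: str                                       # e.g. "amd64" or "arm64"
    gpus: int = 0
    devices: set[str] = field(default_factory=set)  # e.g. {"camera"}
    assigned: list[str] = field(default_factory=list)


@dataclass
class Component:
    name: str
    arch: Optional[str] = None                      # None means any architecture
    needs_gpu: bool = False                         # GPUs are treated as shareable here
    devices: set[str] = field(default_factory=set)


def eligible(host: Host, comp: Component) -> bool:
    """True if the host offers every capability the component requires."""
    if comp.arch is not None and host.arch != comp.arch:
        return False
    if comp.needs_gpu and host.gpus == 0:
        return False
    return comp.devices <= host.devices


def place(components: list[Component], hosts: list[Host]) -> dict[str, Optional[str]]:
    """Assign each component to the eligible host with the fewest components."""
    placement: dict[str, Optional[str]] = {}
    for comp in components:
        candidates = [h for h in hosts if eligible(h, comp)]
        if not candidates:
            placement[comp.name] = None             # unschedulable at this site
            continue
        target = min(candidates, key=lambda h: len(h.assigned))
        target.assigned.append(comp.name)
        placement[comp.name] = target.name
    return placement


hosts = [Host("edge-1", "amd64", gpus=1), Host("edge-2", "arm64", devices={"camera"})]
comps = [
    Component("inference", needs_gpu=True),
    Component("camera-adapter", devices={"camera"}),
    Component("dashboard", arch="amd64"),
    Component("lidar-adapter", devices={"lidar"}),
]
print(place(comps, hosts))
```

A production scheduler would also weigh CPU and memory requests and re-place components when a host fails; this sketch checks declared capabilities only.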

Build intelligent edge applications by composing containers for purposes such as protocol adaptors, ML libraries, and data analytics. The Edge Enforcer also enables edge site telemetry management, local storage, and processing. In addition, it forwards enriched and filtered data to the cloud, handling edge caching and connectivity issues.
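The filter, enrich, cache, and forward flow described above can be pictured as a small store-and-forward buffer. This is a hypothetical Python illustration, not the Edge Enforcer’s telemetry API: the `StoreAndForward` class, the reading format, the `site` tag, and the cache size are assumptions. Readings are filtered and enriched locally, held in a bounded cache, and flushed upstream whenever the injected `send` callable reports success; while the link is down, the oldest readings are evicted once the cache is full.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    def __init__(self, site: str, send: Callable[[dict], bool], max_cached: int = 1000):
        self.site = site
        self.send = send  # returns True if the upload succeeded
        # Bounded cache: appending to a full deque evicts the oldest reading.
        self.cache: deque = deque(maxlen=max_cached)

    def ingest(self, reading: dict) -> None:
        # Filter: drop readings that carry no value.
        if reading.get("value") is None:
            return
        # Enrich: tag each reading with the site it came from.
        self.cache.append({**reading, "site": self.site})
        self.flush()

    def flush(self) -> int:
        """Forward cached readings in order; stop at the first failed upload."""
        sent = 0
        while self.cache:
            if not self.send(self.cache[0]):
                break  # link down: keep the reading cached for a later flush
            self.cache.popleft()
            sent += 1
        return sent
```

Stopping at the first failed upload preserves ordering, at the cost of retrying only on the next `ingest` or an explicit `flush`.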

What is Edge AI model management?

Edge AI model management is the process of deploying, updating, monitoring, and maintaining AI models running on distributed edge devices close to where data is produced. It’s essential for keeping models accurate, secure, and aligned with changing data and operational conditions across sites. Without proper management, AI performance quickly degrades, limiting real-world impact. Avassa simplifies this by automating the entire model lifecycle, from deployment to updates and observability, ensuring consistent, reliable Edge AI operations at scale.

Give it a go! Request a free trial today to start using Avassa for Edge AI on your own Edge AI applications and models.

Edge AI Implementations: Industry Use Cases

| Industry | Use Case | Description |
|---|---|---|
| 🏭 Manufacturing | Predictive Maintenance | Detect equipment issues before failure to reduce downtime and improve efficiency. |
| 🏥 Healthcare | AI-Powered Diagnostics | Deploy diagnostic models at the edge to enable faster, localized decision-making. |
| 🛒 Retail | Smart Customer Analytics | Analyze shopper behavior in real time to optimize layouts and drive sales. |
| 🚗 Autonomous Vehicles | Real-Time AI Decision-Making | Process data locally for split-second decisions in autonomous navigation. |
| 🌆 Smart Cities | Traffic & Surveillance AI | Monitor and manage urban infrastructure with low-latency, on-site processing. |

Avassa for Edge AI Demos: See it in Action

In this short demo, we show how to manage the lifecycle of Edge AI models at the edge.

Avassa for Edge AI Solution Walkthrough

GPU Management for AI at the Edge



Avassa in NVIDIA Inception

We are proud members of NVIDIA’s Inception Program.
