Experimenting with autonomous self-healing edge sites

In this blog post, I’ll experiment a bit with autonomous self-healing edge sites and show why they are crucial for a stellar edge infrastructure.

Why are autonomous self-healing edge sites important?

Edge projects are in many cases motivated by latency, privacy, network bandwidth optimization, and cost. But we see another major driver: you need to run your business even when there is a network issue. Functions for personal safety, intrusion detection, industrial automation, point of sale, etc. need to keep running in order to guarantee business availability. Still, we see many edge solutions that lack local site cluster mechanisms and assume connectivity to the cloud in order to function.

Avassa edge clusters differ from similar products in two primary ways:

- Many edge solutions depend on a central component being available to take rescheduling or healing actions on the site. An Avassa site takes these decisions locally.

- Many solutions also lack a cluster mechanism for the edge, so each edge host lives in isolation. The hosts at an Avassa site form a cluster.

In this blog post, I will show how you can easily set up an Avassa cluster with local VMs on your laptop. With that setup, I will walk through different cluster scenarios to illustrate Avassa’s principles.

Create a site with the clustered edge hosts

I will use multipass.io to easily set up Ubuntu VMs. You can use any VM environment or “real” Ubuntu hosts. First, create three similar VMs and give them proper names:

$ multipass launch --name cluster-host-1 --cpus 2 --disk 10G --mem 4G

$ multipass launch --name cluster-host-2 --cpus 2 --disk 10G --mem 4G

$ multipass launch --name cluster-host-3 --cpus 2 --disk 10G --mem 4G

This gives you three Ubuntu VMs of decent size to run some applications.
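If you prefer not to type the command three times, the launches can be wrapped in a small loop. This is only a sketch that repeats the exact flags used above. The function name launch_cluster_vms and the DRY_RUN switch are my own illustration, not part of Multipass. By default it only prints the commands; set DRY_RUN=0 to actually run them.

```shell
# Launch (or just preview) the three VMs used in this post.
# DRY_RUN is a hypothetical switch for this sketch: anything but "0" only prints.
launch_cluster_vms() {
  prefix="echo"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    prefix=""
  fi
  for i in 1 2 3; do
    # Same flags as the three commands above, only the name changes.
    $prefix multipass launch --name "cluster-host-$i" --cpus 2 --disk 10G --mem 4G
  done
}

launch_cluster_vms
```

Previewing first makes it easy to adjust the CPU, disk, and memory sizes before anything is created.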

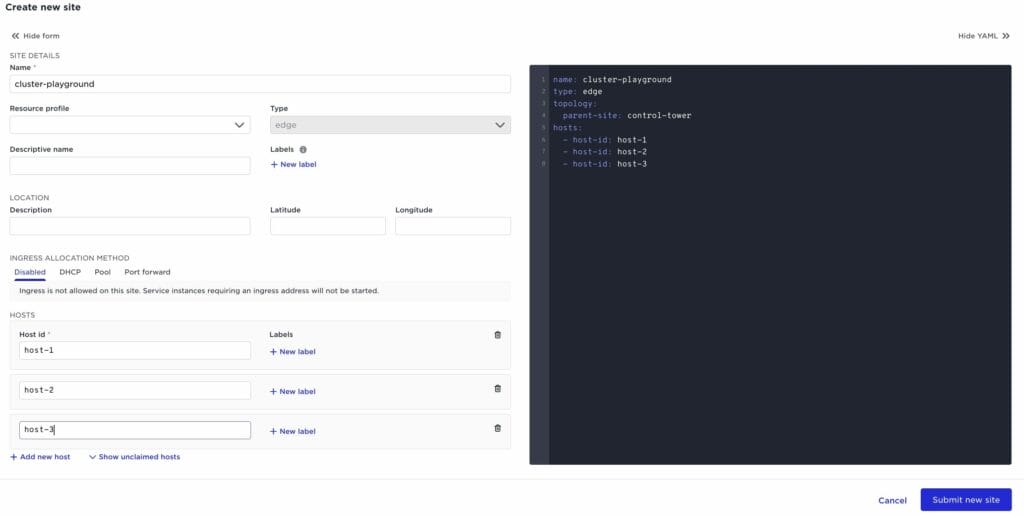

Prepare your site in Control Tower. In this case, we will enter user-friendly host ids, which we will later pass to the installer on each of the hosts above:

This is an example of pre-provisioning the hosts. You can perform the steps in the reverse order if you prefer. See our guide for creating sites for more details.

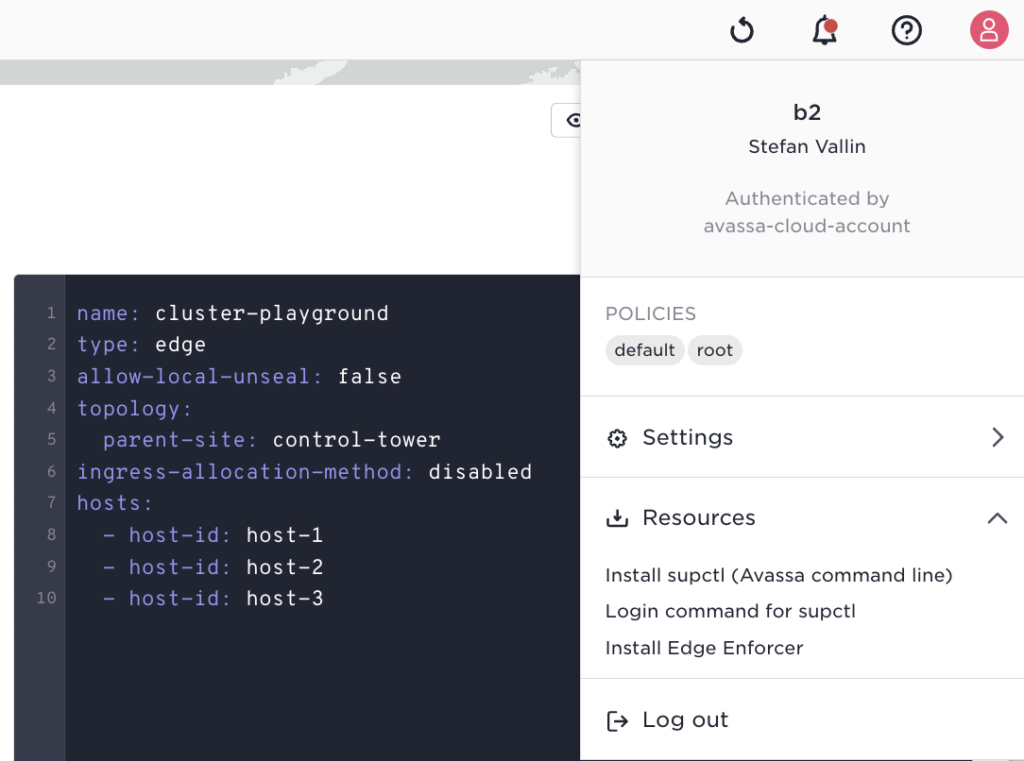

Next, get the installer for Edge Enforcer: click the user icon and pick the “Install Edge Enforcer” item:

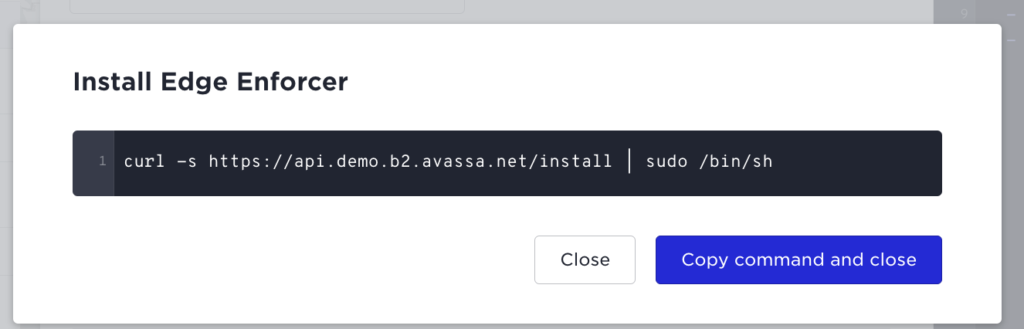

This will give you the install command:

Enter each VM and install Edge Enforcer. We will pass an extra parameter to the installer to supply the host id, rather than letting the installer pick up the board serial id:

$ multipass shell cluster-host-1

In the shell, paste the command above, but append the extra parameters -s -- --host-id host-1 before hitting enter:

$ curl -s https://api.demo.b2.avassa.net/install | sudo /bin/sh -s -- --host-id host-1

Perform the same operation in the other two VM instances, using the host ids host-2 and host-3.
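The three installs can also be driven from the laptop with multipass exec instead of opening a shell in each VM. Treat the following as a sketch under a few assumptions: the install URL is the one from my demo environment (yours will differ), the installer is assumed to run without prompting, and the function names and the DRY_RUN switch are made up for this example. By default it only prints what it would run.

```shell
# Install URL as shown by Control Tower; replace with the one for your environment.
INSTALL_URL="https://api.demo.b2.avassa.net/install"

# Build the command that runs inside a VM for a given host id.
remote_install_cmd() {
  printf 'curl -s %s | sudo /bin/sh -s -- --host-id %s' "$INSTALL_URL" "$1"
}

# Run (or preview) the installer on cluster-host-1..3 with host ids host-1..3.
# DRY_RUN is a hypothetical switch for this sketch: anything but "0" only prints.
install_all() {
  for i in 1 2 3; do
    cmd=$(remote_install_cmd "host-$i")
    if [ "${DRY_RUN:-1}" = "0" ]; then
      multipass exec "cluster-host-$i" -- sh -c "$cmd"
    else
      echo "multipass exec cluster-host-$i -- sh -c '$cmd'"
    fi
  done
}

install_all
```

Building the command in one place keeps the -s -- --host-id part identical on every host, which is the detail that is easiest to get wrong when pasting by hand.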

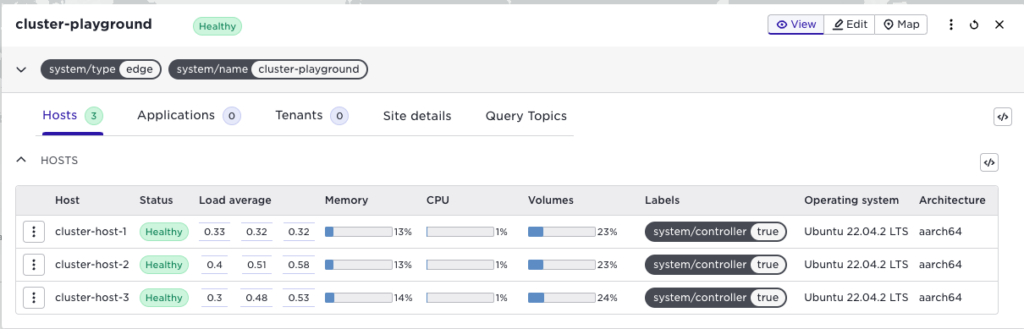

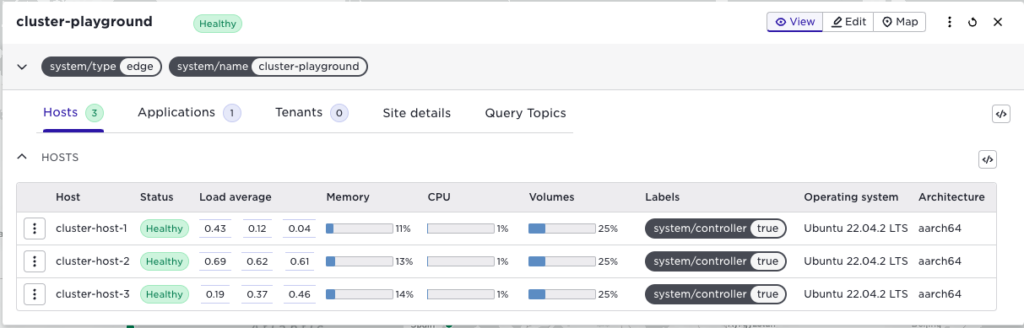

If you now return to Control Tower, you will see your site become “healthy”.

Scenarios

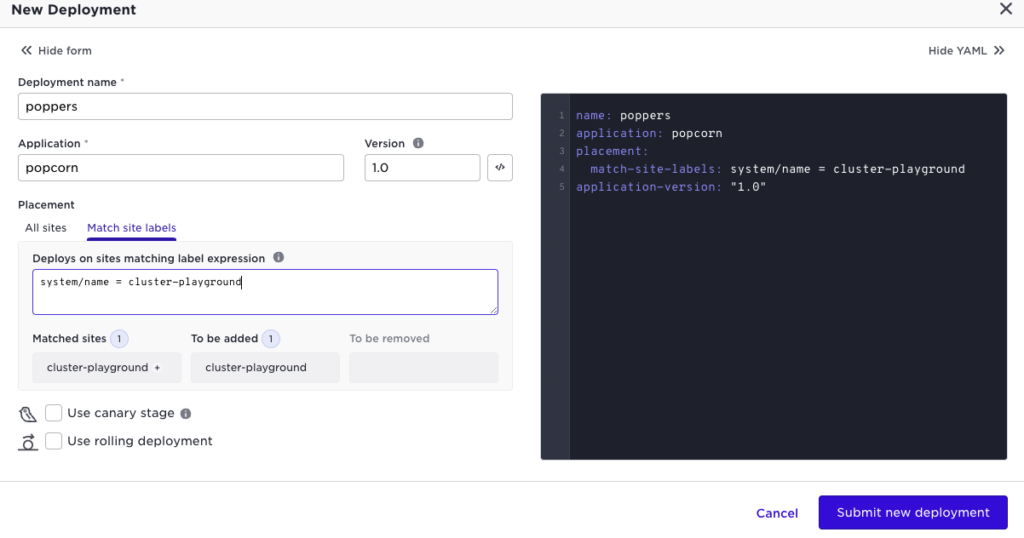

Start by deploying an application. You can pick the simple popcorn application from the first tutorial in our documentation. Deploy it to your newly created cluster:

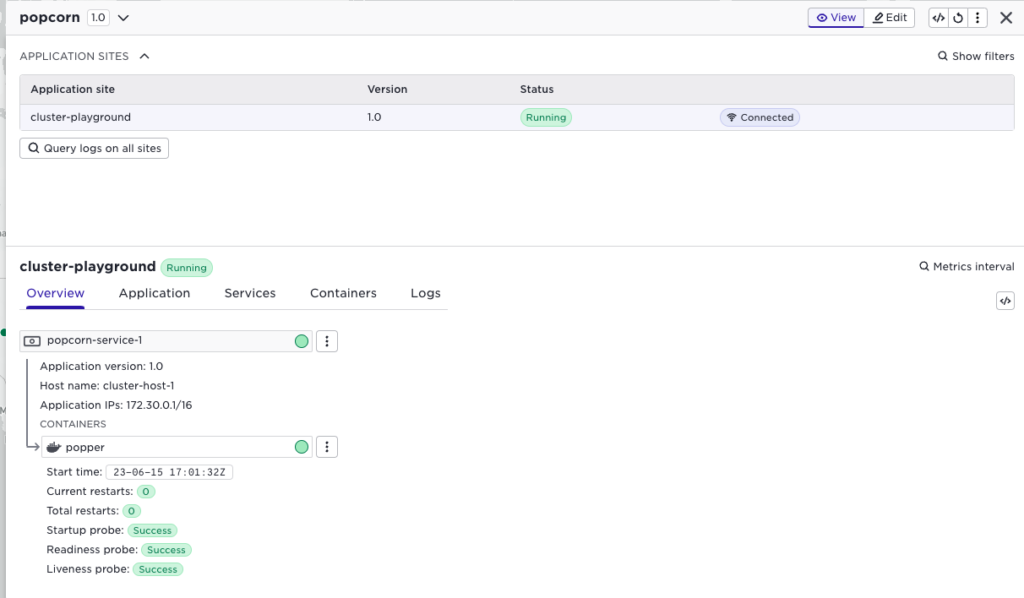

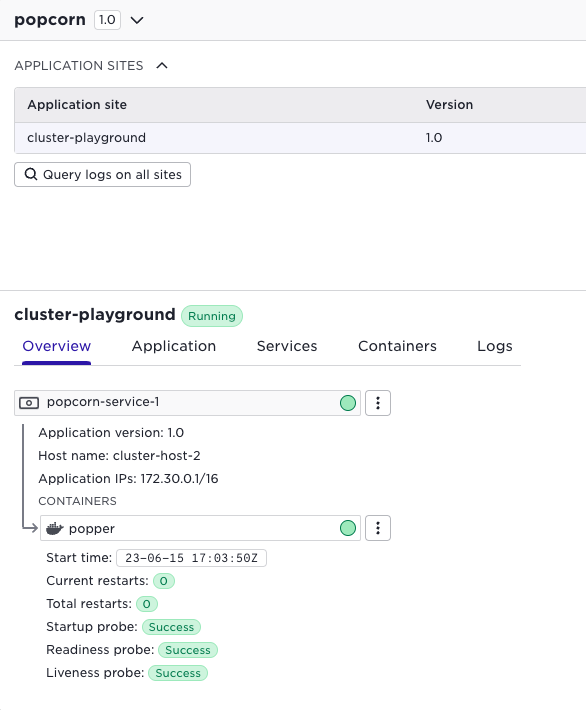

Navigate to the application to inspect which host it was scheduled to, in this case cluster-host-1:

Let us take cluster-host-1 down by stopping the VM:

$ multipass stop cluster-host-1

What is important to understand going forward is that everything that takes place in the cluster is autonomous for that site. The Edge Enforcers on the hosts communicate with each other, and both state and images are replicated between them. This means that no communication back to Control Tower or other central components is needed to reschedule an application to another host. The scheduling decisions are taken locally by the Edge Enforcers; Control Tower is not involved. The scenario I will demonstrate now therefore works exactly the same when the site is disconnected. (Try this by simply disabling the network on the laptop running your VMs.) But I will keep the connection up in order to illustrate what happens using the Control Tower UI.

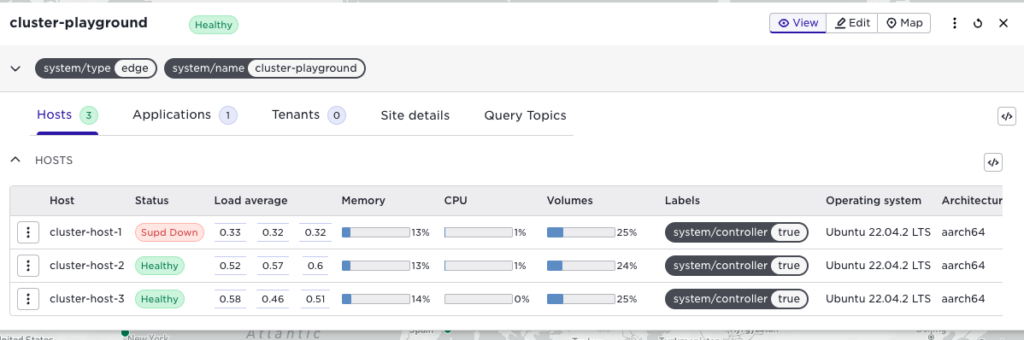

If you now check the site status you will see the host going down:

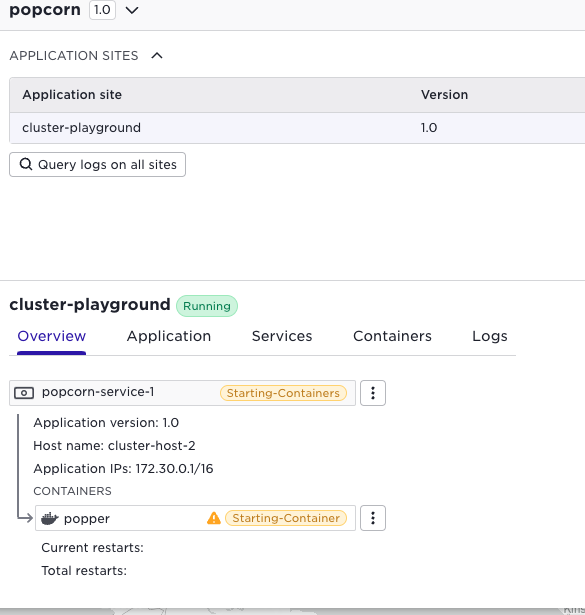

Switch to the application view and you will see that the application is now starting on cluster-host-2.

And soon it is up and running:

You can inspect the scenario directly on the individual hosts by monitoring the output from docker ps. Below you can see popcorn running on the host (start a shell on the corresponding host first):

$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

fa10bde341f9 0b82b649b0dc "/bin/sh -c $EXECUTA…" 3 hours ago Up 3 hours b2.popcorn.popper-1.popper

cd66ea306ed9 avassa/nsctrl "/pause" 3 hours ago Up 3 hours b2.popcorn.popper-1.nsctrl

c73a5d5a5bb2 120de119c68a "/docker-entrypoint.…" 17 hours ago Up 17 hours supd
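To check several hosts without opening a shell on each, you can ask docker for the container names only and filter on the application name. The sketch below assumes that the application name appears as a dot-separated part of the container name, as in the output above; I have not verified that this naming holds in general. The helper names are invented for this example.

```shell
# Read container names on stdin and keep those belonging to the given application.
# Assumes names look like "<tenant>.<app>.<...>", as in the docker ps output above.
containers_for_app() {
  grep -F ".$1." || true
}

# Usage: where_is_app APP VM...
# Prints the matching containers for each listed VM that runs the application.
where_is_app() {
  app=$1
  shift
  for vm in "$@"; do
    names=$(multipass exec "$vm" -- sudo docker ps --format '{{.Names}}' | containers_for_app "$app")
    if [ -n "$names" ]; then
      printf '%s:\n%s\n' "$vm" "$names"
    fi
  done
}
```

For example, with cluster-host-1 stopped, where_is_app popcorn cluster-host-2 cluster-host-3 lists the popcorn containers on whichever of the two remaining hosts took over.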

In the same way, the logs from the Edge Enforcer container, supd, show events related to the scenario. The log snippet below shows host-2 picking up the image in order to take over the application:

$ sudo docker logs supd

...

<NOTICE> 2023-06-15 17:01:29.762504Z cluster-playground-002 mona_server:309 <0.1377.0>

new_image [b2]avassa-public/movie-theaters-demo/kettle-popper-manager@sha256:ba1d912df807e4e36a57517df51be8c1cf3e42ca04a97bcefa02586ba81896f8 from supd@cluster-playground-003

<INFO> 2023-06-15 17:01:30.979742Z cluster-playground-002 mona_server:490 <0.21015.1>
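The supd log is verbose, so it can help to pick out just the image transfers. The filter below is a sketch that assumes the message layout seen in the snippet above (new_image, the image reference, the word from, then the sending peer). That layout is taken from this one log excerpt and is not a documented format. The function name is my own.

```shell
# Read supd log text on stdin and print "<image> from <peer>" for each new_image message.
# Assumes the "new_image <image> from <peer>" layout seen in the excerpt above.
image_events() {
  awk '{
    for (i = 1; i + 3 <= NF; i++)
      if ($i == "new_image" && $(i + 2) == "from")
        print $(i + 1), "from", $(i + 3)
  }'
}

# On a host, feed it the container log (docker logs may write to stderr as well):
#   sudo docker logs supd 2>&1 | image_events
```

Scanning every field instead of only the first one lets the filter work whether the message sits on its own line or on the same line as the log header.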

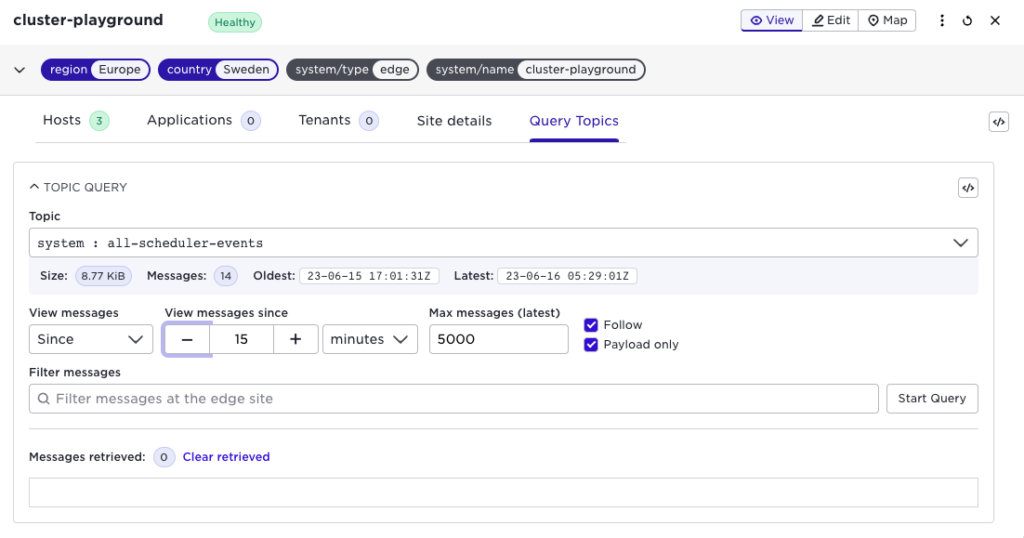

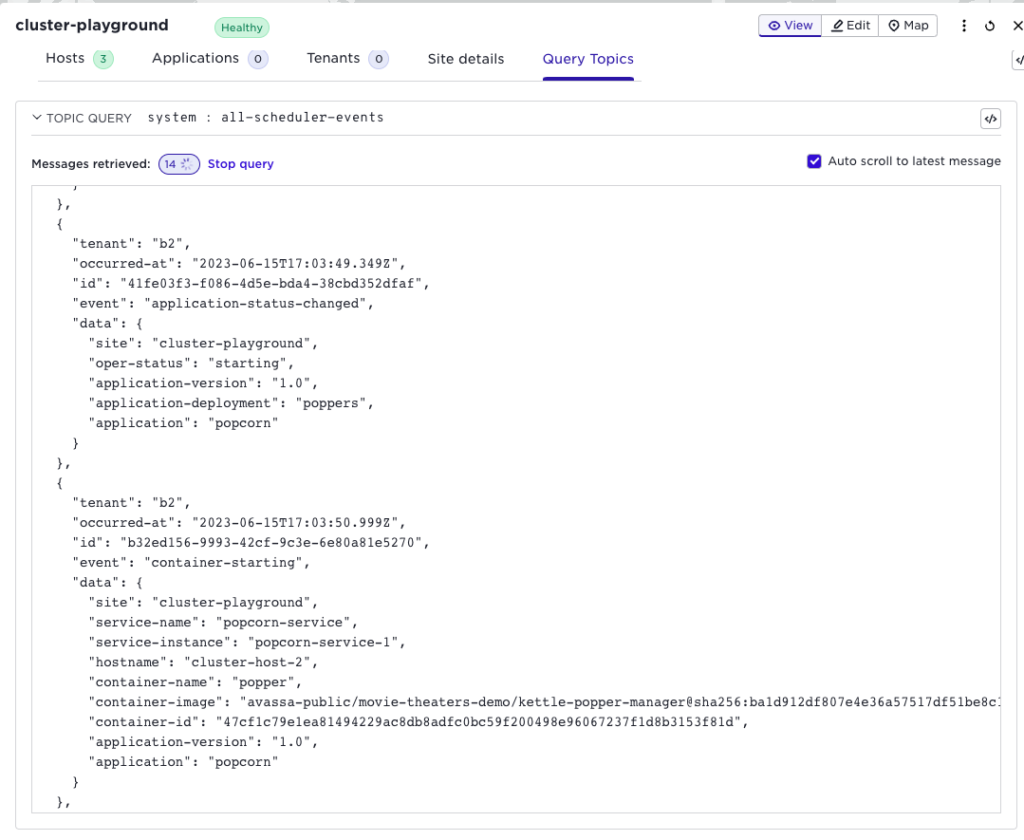

The scheduler publishes all events on a Volga topic. You can inspect that topic to see the scheduler events for this scenario:

And here you see the events showing that the application is starting on cluster-host-2.

We can restart the stopped host:

$ multipass start cluster-host-1

Conclusion

In this blog post, I showed how you can easily experiment with Avassa clusters. We saw that applications self-heal locally at the site in case of host issues.